The first experiment in my project funded by the Digital Innovation Lab at Stonehill College investigates the use of the preset patch Granular Freezer (designed by Critter and Guitari) to process audio. In this case the audio is coming from a lap steel guitar. Since I’m just getting used to both the Organelle and the EYESY, I will simply be improvising using a preset with the EYESY.

While describing his concept of stochastic music, composer Iannis Xenakis essentially also described the idea of granular synthesis in his book Formalized Music: Thought and Mathematics in Composition . . .

“Other paths also led to the same stochastic crossroads . . . natural events such as the collision of hail or rain with hard surfaces, or the song of cicadas in a summer field. These sonic events are made out of thousands of isolated sounds; this multitude of sounds, seen as a totality, is a new sonic event. . . . Everyone has observed the sonic phenomena of a political crowd of dozens or hundreds of thousands of people. The human river shouts a slogan in a uniform rhythm. Then another slogan springs from the head of the demonstration; it spreads towards the tail, replacing the first. A wave of transition thus passes from the head to the tail. The clamor fills the city, and the inhibiting force of voice and rhythm reaches a climax. It is an event of great power and beauty in its ferocity. Then the impact between the demonstrators and the enemy occurs. The perfect rhythm of the last slogan breaks up in a huge cluster of chaotic shouts, which also spreads to the tail. Imagine, in addition, the reports of dozens of machine guns and the whistle of bullets adding their punctuations to this total disorder. The crowd is then rapidly dispersed, and after sonic and visual hell follows a detonating calm, full of despair, dust, and death.”

This passage by Xenakis goes into dark territory very quickly, but the scenario harkens back to the composer’s own past, when he lost an eye in a conflict with occupying British tanks during the Greek Civil War.

Granular synthesis was theorized before it was practical: its complexity requires more computing power than was available when it was first proposed. Granular synthesis takes an audio waveform, sample, or audio stream, chops it into grains, and then resynthesizes the sound by combining those grains into clouds. The synthesist can often control aspects such as grain length, grain playback speed, and cloud density, among others. Since the user can control the playback speed of the grains independently of the speed at which playback moves through the source material, granular synthesis allows one to stretch or compress the timing of a sound without affecting its pitch, as well as to change the pitch of a sound without affecting its duration.
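To make the independence of timing and pitch a bit more concrete, here is a minimal Python sketch of the general idea. It is not taken from Granular Freezer or from Clouds; the function names, the Hann window, and the default grain length and hop size are all assumptions I chose for illustration. The `stretch` argument controls how fast we move through the source, while `speed` controls how fast each individual grain is played back.

```python
import math

def hann(n):
    """Hann window of length n, used to fade each grain in and out."""
    if n == 1:
        return [1.0]
    return [0.5 - 0.5 * math.cos(2 * math.pi * i / (n - 1)) for i in range(n)]

def granular_stretch(samples, stretch, grain_len=64, hop=16, speed=1.0):
    """Overlap-add windowed grains (illustrative only, all defaults assumed).

    stretch > 1 lengthens the sound, stretch < 1 shortens it.
    speed is the playback rate inside each grain, which sets the pitch.
    """
    window = hann(grain_len)
    out_len = int(len(samples) * stretch)
    out = [0.0] * (out_len + grain_len)
    norm = [0.0] * (out_len + grain_len)   # summed window weights per sample
    out_pos = 0
    while out_pos < out_len:
        read_start = out_pos / stretch      # where in the source this grain begins
        for i in range(grain_len):
            src = read_start + i * speed    # position inside the source
            j = int(src)
            if j + 1 >= len(samples):
                break                       # ran off the end of the source
            frac = src - j
            # linear interpolation between neighbouring samples
            value = samples[j] * (1 - frac) + samples[j + 1] * frac
            out[out_pos + i] += value * window[i]
            norm[out_pos + i] += window[i]
        out_pos += hop
    # divide by the summed window weights so overlapping grains do not get louder
    return [o / n if n > 1e-9 else 0.0 for o, n in zip(out[:out_len], norm[:out_len])]
```

Calling `granular_stretch(samples, 2.0)` should give a result twice as long at roughly the original pitch, while `granular_stretch(samples, 1.0, speed=2.0)` keeps the length and plays each grain an octave up. A sketch this simple makes no attempt to align the phase of overlapping grains, so it will sound rougher than a real granular processor.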

Granular Freezer is sort of a budget version of Mutable Instruments Clouds. I call it a budget version because Clouds allows for the control of more than a half dozen parameters of the sound, while Granular Freezer gives the user control of four parameters, namely grain size, spread, cloud density, and wet / dry mix. These four parameters correspond to the four prominent knobs on the Organelle. Spread can be thought of as a window that functions somewhat like a delay time. In other words, it is a window of past audio that the algorithm draws from to create grains. As you turn the spread down, the result starts to sound like an eccentric slap (short) delay. Wet / dry mix is a very standard parameter in audio processing. It is the ratio of processed sound to original sound that is sent to the output. The Aux button can be used to freeze the sound, that is, to hold the current spread in memory until the sound is unfrozen. You may also use a pedal to trigger this function. One feature of the algorithm that I didn’t use is the ability to transpose the audio from a MIDI keyboard.

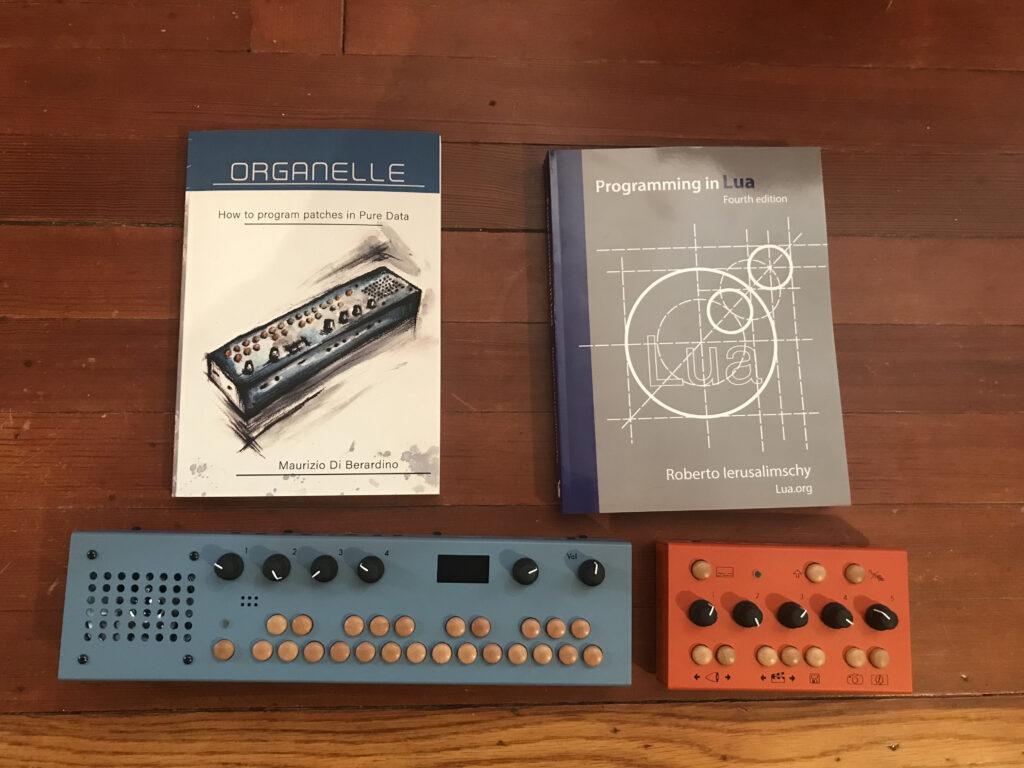
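One way to picture spread and freeze is as a ring buffer of recent input. The Python sketch below is only my mental model, not the patch's actual implementation: the class name `FreezeBuffer`, its methods, and the choice to pick grain start points at random are all hypothetical. Spread limits how far back a grain may start, and freezing simply stops new audio from overwriting the buffer.

```python
import random

class FreezeBuffer:
    """Ring buffer of recent input (hypothetical model of spread and freeze)."""

    def __init__(self, size):
        self.data = [0.0] * size
        self.write_pos = 0       # index where the next sample will be written
        self.frozen = False

    def write(self, sample):
        """Record one input sample unless the buffer is frozen."""
        if self.frozen:
            return
        self.data[self.write_pos] = sample
        self.write_pos = (self.write_pos + 1) % len(self.data)

    def grain_start(self, spread, rng=random):
        """Pick a random start index within the most recent `spread` samples."""
        spread = max(1, min(spread, len(self.data)))
        offset = rng.randrange(1, spread + 1)    # 1..spread samples back
        return (self.write_pos - offset) % len(self.data)
```

With a very small `spread`, every grain starts almost at the most recent sample, which fits the short slap delay character described above. With `frozen` set to `True`, the same stretch of audio stays available for grains no matter what is played afterwards.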

Since this is my first experiment with the Organelle, I’m not going to go through the algorithm in great detail, but I will give an overview of it. All patches for the Organelle use a patch called a mother patch, which should never be altered. It controls input to and output from the Organelle. Values are passed to and from the mother patch in order to avoid input and output errors. You can download the mother patch so you can develop instruments on a computer without having access to an Organelle. This is also a good way to check for coding errors before you upload a patch to your Organelle.

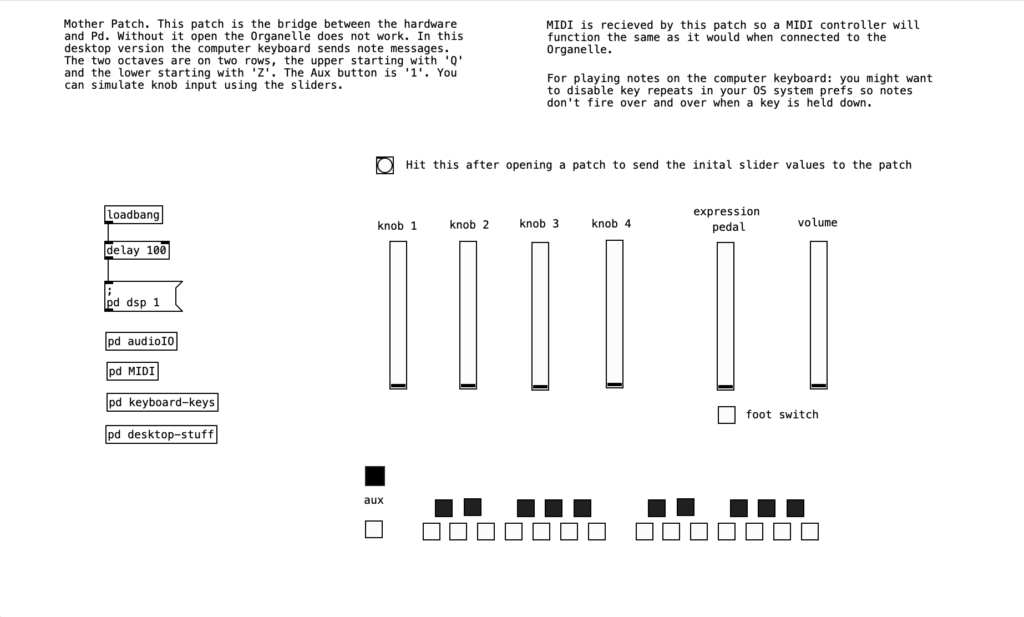

If we double click on the subpatch pd MIDI, we can see, amongst other things, how the Organelle translates incoming MIDI controller data (controller numbers 21, 22, 23, and 24) to knob data . . .

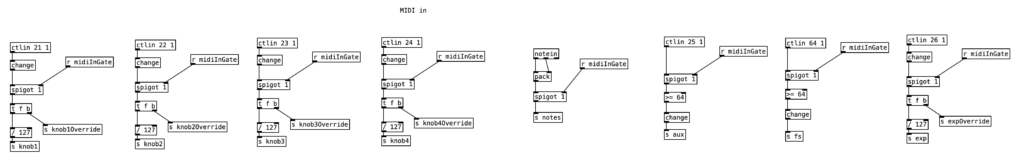
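In text form, that translation might look something like the Python sketch below. The controller numbers 21 through 24 come from the mother patch as described above; the assumption that they map to knobs 1 through 4 in that order, the 0.0 to 1.0 output range, and the name `cc_to_knob` are mine.

```python
# Controller number -> knob number. The numbers 21-24 are from the mother patch;
# the one-to-one ordering is an assumption.
KNOB_CONTROLLERS = {21: 1, 22: 2, 23: 3, 24: 4}

def cc_to_knob(controller, value):
    """Return (knob_number, position in 0.0-1.0), or None for other controllers."""
    knob = KNOB_CONTROLLERS.get(controller)
    if knob is None:
        return None
    value = max(0, min(127, value))   # MIDI controller values are 7-bit
    return knob, value / 127.0
```

For example, `cc_to_knob(21, 127)` would report knob 1 turned all the way up, while a message on an unrelated controller returns `None` and is ignored.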

When we double click on pd audioIO, we can see how the mother patch handles incoming audio (left) as well as outgoing audio (right) . . .

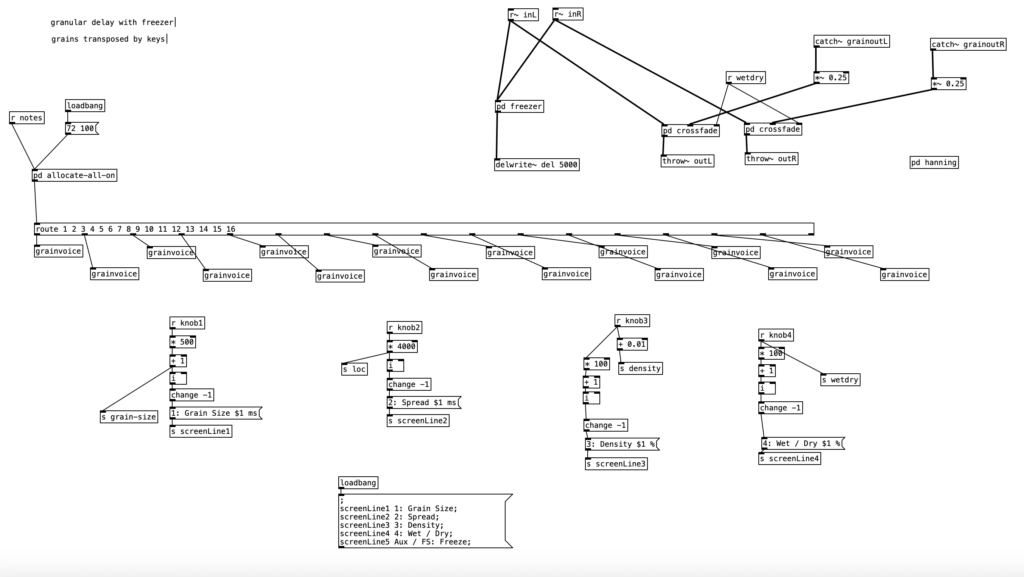

Any given Organelle patch typically consists of a main program (by convention, named main.pd), as well as a number of self-contained subpatches called abstractions. In the case of Granular Freezer, in addition to the main program there are three abstractions: latch-notes.pd, grain.pd, and grainvoice.pd. Given the complexity of the program, I will only go over main.pd.

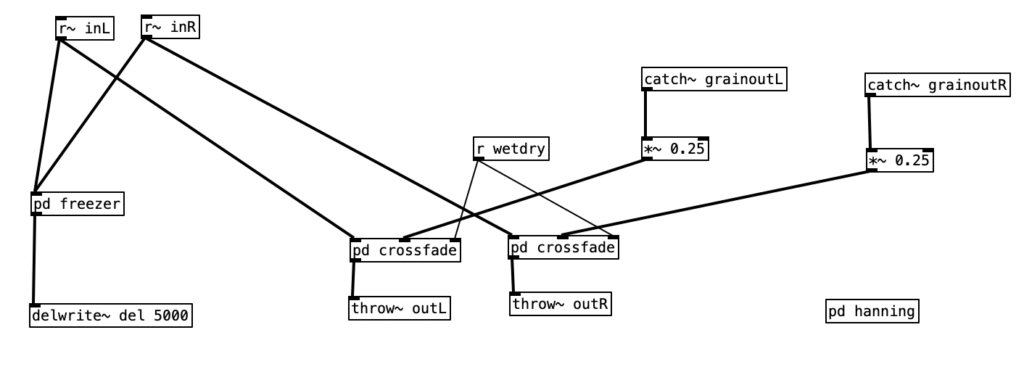

In the upper right hand quadrant we see the portion of the algorithm that handles input and output. It also controls the ability to freeze the audio (pd freezer). The objects catch~ grainoutL and catch~ grainoutR receive the granularly processed audio from grainvoice.pd. The object r wetdry receives data from knob 4, allowing the user to control the ratio of unprocessed sound to processed sound.

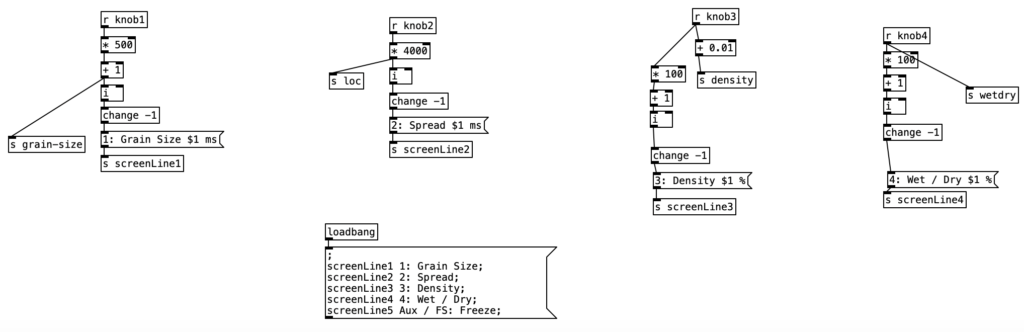
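The wet / dry control can be thought of as a simple crossfade between the two signals. The Python sketch below assumes a linear crossfade with `mix` running from 0.0 (all original sound) to 1.0 (all processed sound); I have not confirmed the exact curve the patch uses, and the function name is hypothetical.

```python
def wet_dry_mix(dry, wet, mix):
    """Linear crossfade (assumed): mix=0.0 is all dry, mix=1.0 is all wet."""
    mix = max(0.0, min(1.0, mix))
    return [(1.0 - mix) * d + mix * w for d, w in zip(dry, wet)]
```

Under this assumption, a mix of 0.8 sends 80% of the granular signal and 20% of the original signal to the output.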

The lower half of main.pd sets the numerical range for each of the four knobs, sends the data to wherever it is needed (as was done with wetdry), and sets and sends the text to be displayed on the Organelle’s screen. This last item causes the function of each knob to be displayed along with its current value. In the message box at the bottom of the screen, we see a similar item related to the aux key, screenLine5. The part of the program that updates the display for screenLine5 is in pd freezer.
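As a rough text equivalent of that scaling and labeling, here is a short Python sketch. The helper names are hypothetical, and the only range value taken from the patch is the 1 millisecond minimum grain size; the 500 millisecond maximum and the label format are placeholders I made up for illustration.

```python
def scale_knob(position, low, high):
    """Map a knob position in 0.0-1.0 onto the range low..high (linear, assumed)."""
    position = max(0.0, min(1.0, position))
    return low + position * (high - low)

def screen_line(label, value, unit=""):
    """Build the text for one line of the display (format is an assumption)."""
    return f"{label}: {value:.0f}{unit}"

# Hypothetical example: grain size from 1 ms up to an assumed 500 ms
grain_ms = scale_knob(0.0, 1.0, 500.0)
line = screen_line("Grain Size", grain_ms, " ms")
```

Each knob would get its own range and its own line of text, so the screen always shows what the knob does alongside its current value.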

In the improvisation I run a six-string lap steel in C6 tuning through a volume pedal, and then into the Organelle. I also use a foot switch to trigger the freeze function on the patch. Musically, I spend the bulk of the time improvising over an E pedal provided by an eBow on the open low E string. A lot of the improvisation is devoted to arpeggiating Am, C#m, and Em chords on the upper three strings of the instrument. At the beginning of the improvisation the mix is at 80% (only 20% of the original sound). I quickly move to 100% mix. Near the end I move to sliding pitches with the eBow. During this portion of the improvisation I experiment with the spread, and there’s a portion where I move the spread close to its minimum, which yields that eccentric slap delay sound (starts around 8:28). As I move the spread towards its upper end, I can use it as a harmonizer, as the spread holds the previous pitch in its memory as I move on to a new harmonizing pitch. At the end (around 9:15) I move the grain size down to the bottom (1 millisecond), and then turn the cloud density down to the bottom to function as a fade out.

With the EYESY I simply improvised the video using the audio of the video recording I made. With the mode I was using the first two knobs seemed to control the horizontal and vertical configuration of the polygons. These controls allow you to position them in rows, or in a diamond lattice configuration. You can even use these controls to get the polygons to superimpose to an extent. The middle knob seems to increase the size of the polygons, making them overlap a considerable amount. The two knobs on the right control the colors for the polygons and the background respectively. I used these to start from a green on black configuration in honor of the Apple IIe computer.

The video below will allow you to watch the EYESY improvisation and the musical improvisation simultaneously . . .

As I mentioned earlier, I consider Granular Freezer to be a budget version of Clouds. I have the CalSynth clone of Clouds, Monsoon, in a synthesizer rack I’m assembling. It is a wonderful, expressive instrument that creates wonderful sounds. While Granular Freezer is a more limited instrument, it is still capable of creating some wonderful sounds. There are already a couple of variants of Granular Freezer posted on PatchStorage. As I get more familiar with programming for the Organelle, I should be able to create a version of the patch that allows for far greater control of parameters.

All in all, I feel this was a successful experiment. I should be able to reuse the audio for other musical projects. However, there was some room for improvement. There was a little bit of 60-cycle hum coming through the system. This was likely due to a cheap pickup in my lap steel. Likewise, it would be nice if I cleaned up my studio so it would make for a less cluttered YouTube video. As I mentioned earlier, it would be good to add more parameters to the Granular Freezer patch. I used Zoom to capture the video created by the EYESY, and it added my name to the lower left hand corner of the screen. Next time I may try using QuickTime for video capture to avoid that issue. By the end of the summer I hope to develop some Pure Data algorithms to control the EYESY to perform the video in an automated fashion. See you all back here next month for the next installment.