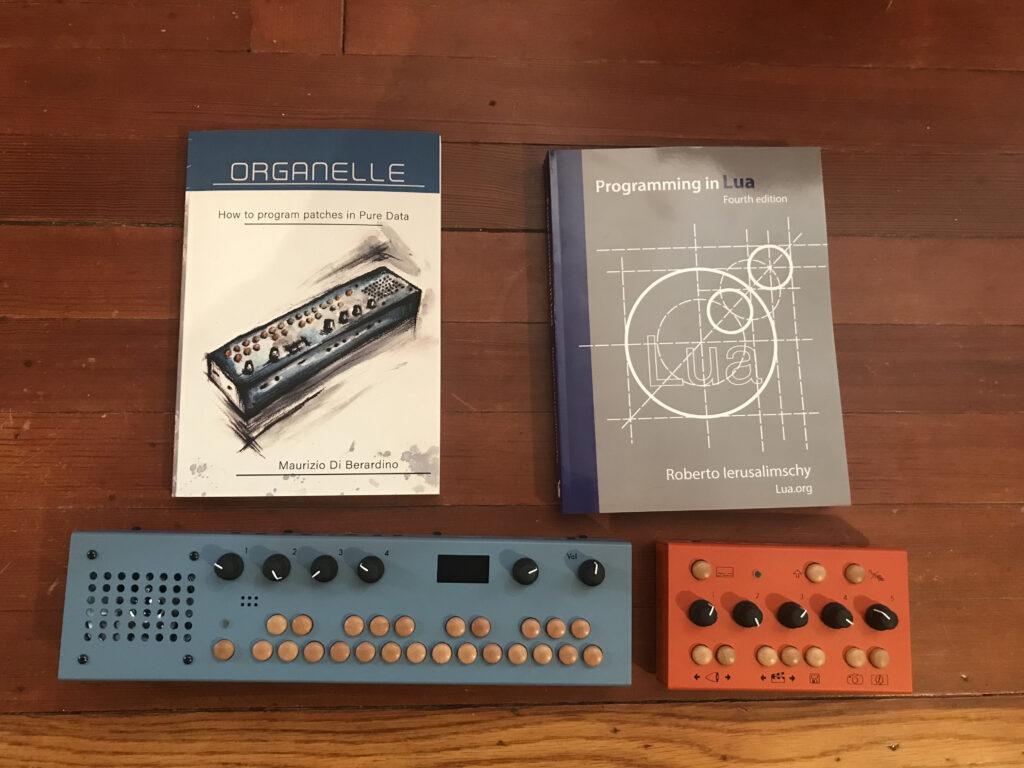

May has been a busy month for me. My second experiment in my project funded by the Digital Innovation Lab at Stonehill College investigates the preset patch Analog Style (designed by Critter and Guitari). To be specific, I am using a WARBL wind controller with EVI fingering to play the patch, with the breath control on the WARBL setting the cutoff frequency of Analog Style (using MIDI controller 24).

Due to the busyness of the end of the semester, this experiment features no original programming on the Organelle. However, I did create a program in Pure Data to create accompaniment and drive the EYESY. To accompany the experiment, I used the H.E.A.P., the Housatonic Electronic Algorithmic Philharmonic. This is a fun, frivolous name I’ve given to a small, battery-powered synthesizer / sampler setup I’ve assembled for live performance. It consists of three synthesizers / samplers: a Volca Sample 2 (which provides 10 channels of late-1980s-style lo-fi digital sampling), a Volca Keys (which can be used as an analog monophonic or three-note polyphonic synthesizer), and a Volca FM 2 (which is a clone of the 1980s classic, the Yamaha DX7, one of the best-selling synthesizers of all time). I’ve also begun to think of the EYESY, as we’ll see later, as part of the H.E.A.P.

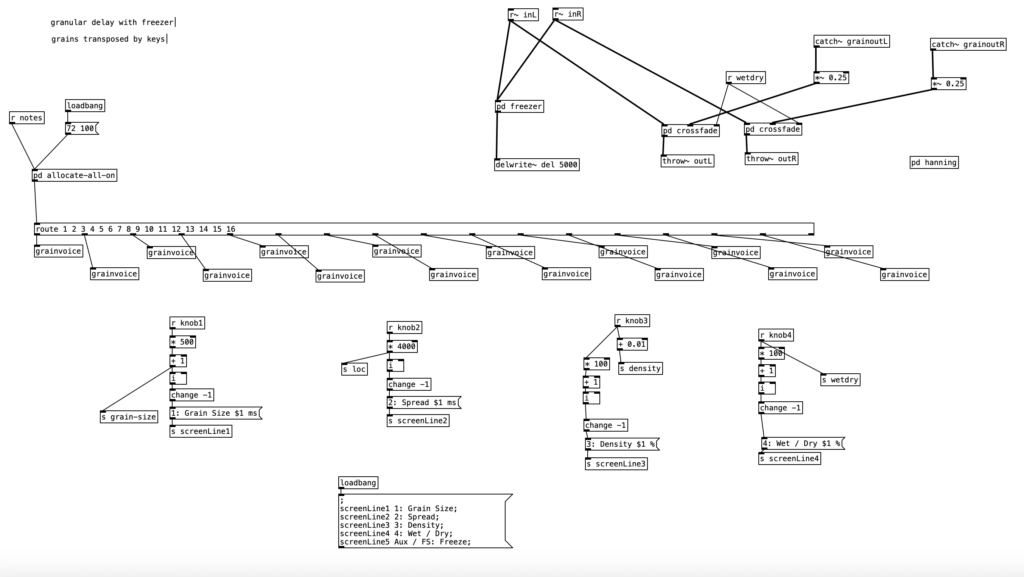

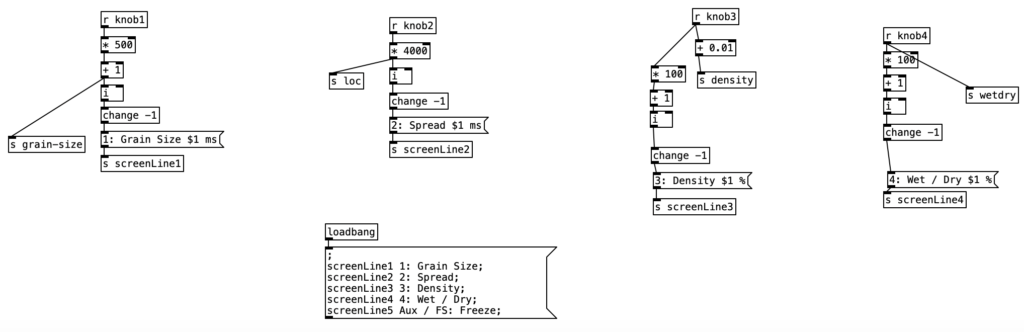

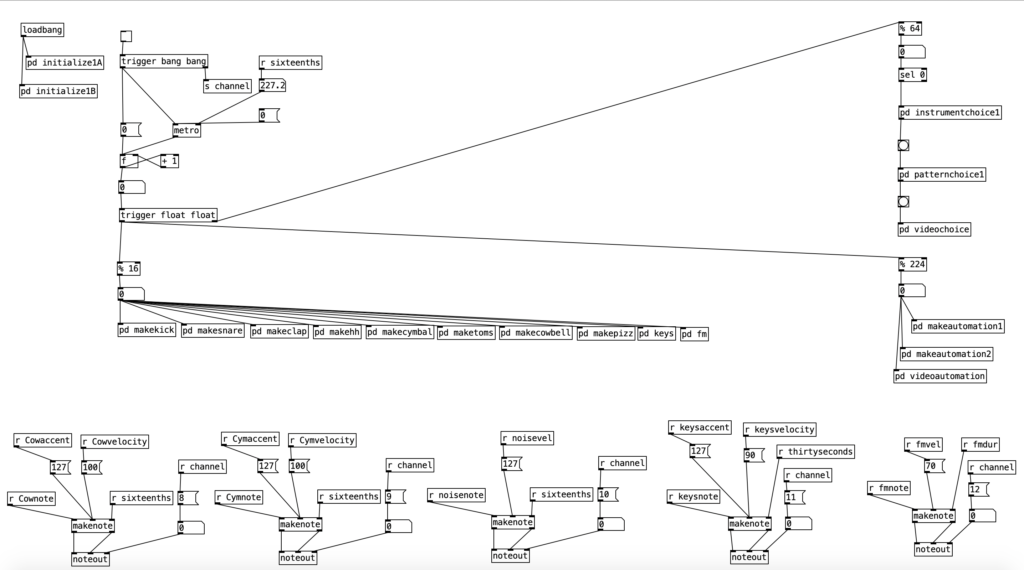

I won’t go into great detail about the program that generates the accompaniment for Experiment 2, as I have other blog posts that go into detail about various algorithms included in the program. Ultimately, the program is intended to generate relatively generic, but fairly usable, R&B-esque slow jams. The music is in common time using sixteenth notes. The portion of the program including and beneath % 16 (mod 16) ensures that the resulting music will have 16 pulses per measure. Likewise, the instrumentation and musical patterns change every four measures. This is enabled by the part of the program including and beneath % 64 (four measures of sixteenth notes add up to 64).
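The two modulo counters described above can be sketched in Python. This is only an illustration of the arithmetic, not a translation of the actual Pure Data patch, and the function and constant names are hypothetical.

```python
PULSES_PER_MEASURE = 16   # sixteenth notes in one measure of common time
PULSES_PER_PHRASE = 64    # four measures of sixteenth notes


def position(pulse):
    """Locate a running sixteenth-note count within its measure and phrase.

    Returns (pulse_in_measure, pulse_in_phrase, phrase_starts).
    """
    pulse_in_measure = pulse % PULSES_PER_MEASURE
    pulse_in_phrase = pulse % PULSES_PER_PHRASE
    # A new four-measure phrase begins whenever the phrase counter wraps to 0.
    return pulse_in_measure, pulse_in_phrase, pulse_in_phrase == 0
```

For example, `position(64)` lands on the first sixteenth note of a new phrase, which is the moment at which the instrumentation and patterns would be re-chosen.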

The Volca Sample provides the drum beat and some string pizzicato (see pd makepizz). The Volca Keys provides synthesized bass patterns that run in two-measure loops. The Volca FM provides progressions of four chords, each containing four notes, that repeat every two measures. To create these chord progressions I used some music programming techniques that I’ve covered in a previous blog entry, though in this experiment I am using the brass-friendly key of G minor.

One of the newer programming tricks I used in this program is an algorithm designed to drive the EYESY. While the EYESY generates hypnotic, interactive video animations, left to its own devices it can get a bit repetitive fairly quickly. In order to generate anything that seems even remotely dynamic, someone needs to perform the EYESY by rotating its five knobs. This is an impossibility for any performing musician, save for a vocalist. Thankfully, we can do the equivalent of turning the knobs on the EYESY through MIDI using controllers 21 through 25.
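To make the idea of "turning a knob over MIDI" concrete, here is a minimal Python sketch that builds the raw three-byte control change message for a given knob. The byte layout (status byte 0xB0 plus the zero-based channel, then controller number, then value) comes from the MIDI standard; the function names are hypothetical, and the knob-to-controller mapping simply assumes knob one is controller 21 and knob five is controller 25.

```python
def control_change(channel, controller, value):
    """Build a 3-byte MIDI control change message (channel is 1-16)."""
    if not 1 <= channel <= 16:
        raise ValueError("MIDI channel must be between 1 and 16")
    if not 0 <= controller <= 127 or not 0 <= value <= 127:
        raise ValueError("controller and value must be between 0 and 127")
    # Status byte: 0xB0 marks a control change; the low nibble is channel - 1.
    return bytes([0xB0 | (channel - 1), controller, value])


def knob_message(knob, value, channel):
    """Message for EYESY knob 1-5, assuming knobs map to controllers 21-25."""
    if not 1 <= knob <= 5:
        raise ValueError("the EYESY has five knobs")
    return control_change(channel, 20 + knob, value)
```

Calling `knob_message(1, 64, 16)` would produce the bytes for setting knob one to roughly its midpoint on MIDI channel 16.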

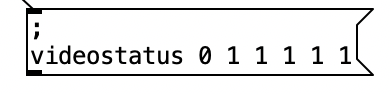

The algorithm I’ve created to drive the EYESY is designed to make slow, evolving changes. To control these changes I’ve created a table called videostatus. It consists of five positions, each containing a one or a zero to denote whether or not changes should be made to a given knob during the current four-measure phrase.

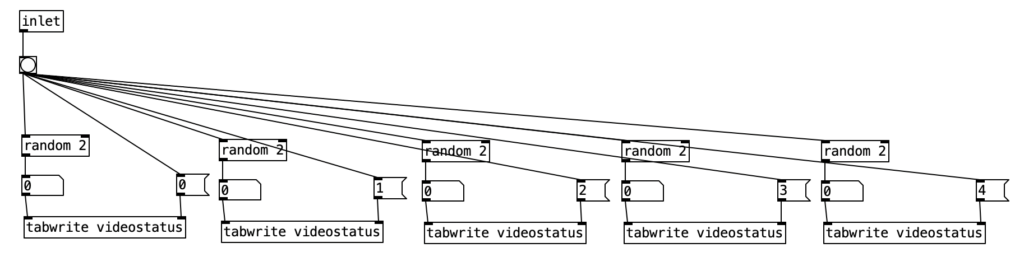

The subroutine pd videochoice is triggered at the beginning of every four-measure phrase. It generates five random numbers, each of which is either a zero or a one. These results are then stored in the table videostatus.
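As a rough Python equivalent of what videochoice does, the sketch below returns a fresh list of five zero-or-one flags. The name `video_choice` and the optional `rng` parameter are hypothetical, and I am assuming each flag is an even coin flip, which the description above does not specify.

```python
import random

NUM_KNOBS = 5  # one flag per EYESY knob


def video_choice(rng=random):
    """Return five flags (0 or 1), one per knob, for the next phrase.

    Assumes each flag is an independent 50/50 choice.
    """
    return [rng.randint(0, 1) for _ in range(NUM_KNOBS)]
```

In use, the returned list would play the role of the videostatus table, and it would be regenerated once every 64 sixteenth notes.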

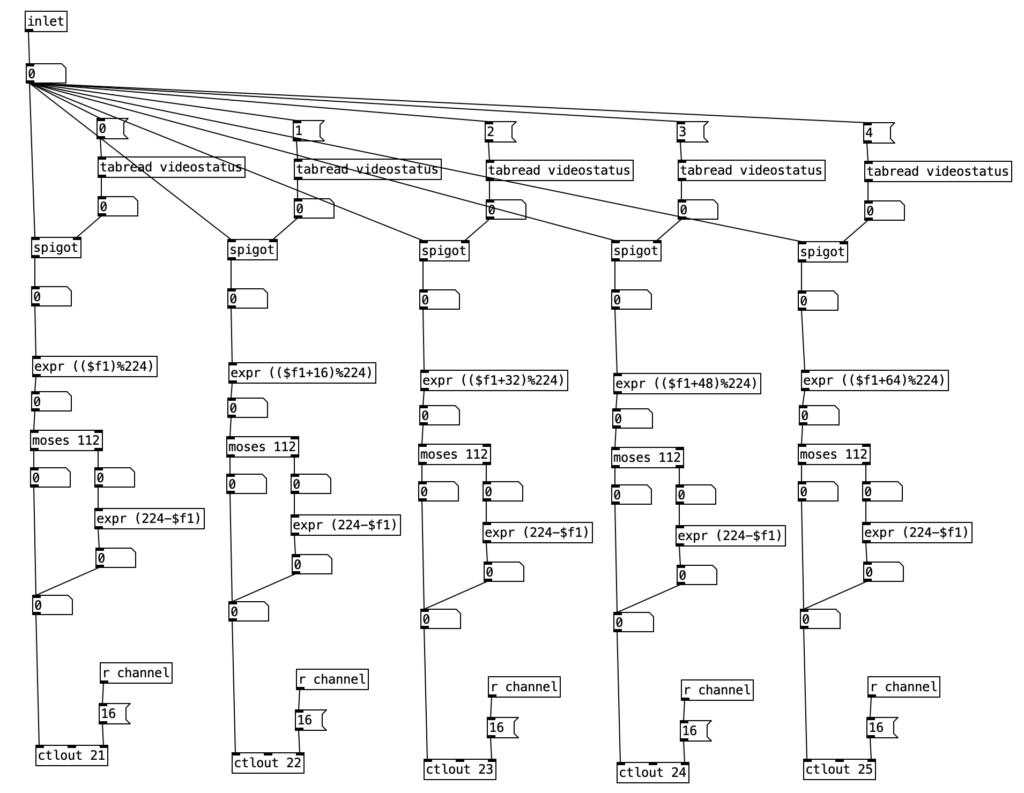

The subroutine pd videoautomation updates the knob positions on the EYESY once every sixteenth note. It is passed the current sixteenth note number modded by 224, which corresponds to 14 measures of sixteenth notes. The subroutine contains five nearly identical columns, one for each of the five knobs on the EYESY. First, the algorithm checks the current state of each of the five positions of the videostatus table. When that value is one, the spigot allows the current sixteenth note number to pass through. This value is then passed through an expr statement that displaces the sixteenth note number. The column corresponding to knob one is not displaced, but each subsequent column is displaced by one measure (16 sixteenths), and the result is modded to stay between 0 and 223. The statement moses 112 is used to determine whether we should be counting up to 112 or counting down to 0. This is accomplished by sending numbers at or above 112 through expr (224-$f1), which causes the result to get smaller as the input value increases. The result is then sent to one of the five controller values (21-25) on MIDI channel 16 (the channel I’ve set my EYESY to).
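The logic of videoautomation amounts to a slow triangle wave per knob, gated by the videostatus flags. Here is a minimal Python sketch of that idea. All names are hypothetical, and it makes two assumptions that the description leaves open: that the displacement is added to the count, and that it accumulates from column to column (knob two by 16, knob three by 32, and so on).

```python
CYCLE = 224      # 14 measures of sixteenth notes
HALF = 112       # turning point, as in [moses 112]
MEASURE = 16     # sixteenth notes per measure
FIRST_CC = 21    # controller number for knob one


def knob_value(step, knob_index):
    """Triangle-wave value (0-112) for a knob; knob_index is 0-4.

    Assumes each knob is shifted one more measure than the previous one.
    """
    shifted = (step + knob_index * MEASURE) % CYCLE
    # Below the turning point we count up; at or above it we count down.
    return shifted if shifted < HALF else CYCLE - shifted


def video_automation(pulse, status):
    """Return (controller, value) pairs for every knob whose flag is 1."""
    step = pulse % CYCLE
    return [
        (FIRST_CC + index, knob_value(step, index))
        for index, enabled in enumerate(status)
        if enabled
    ]
```

Because the peak value is 112, every result fits comfortably inside the 0 to 127 range of a MIDI controller, and knobs whose flag is zero simply hold their last position for the phrase.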

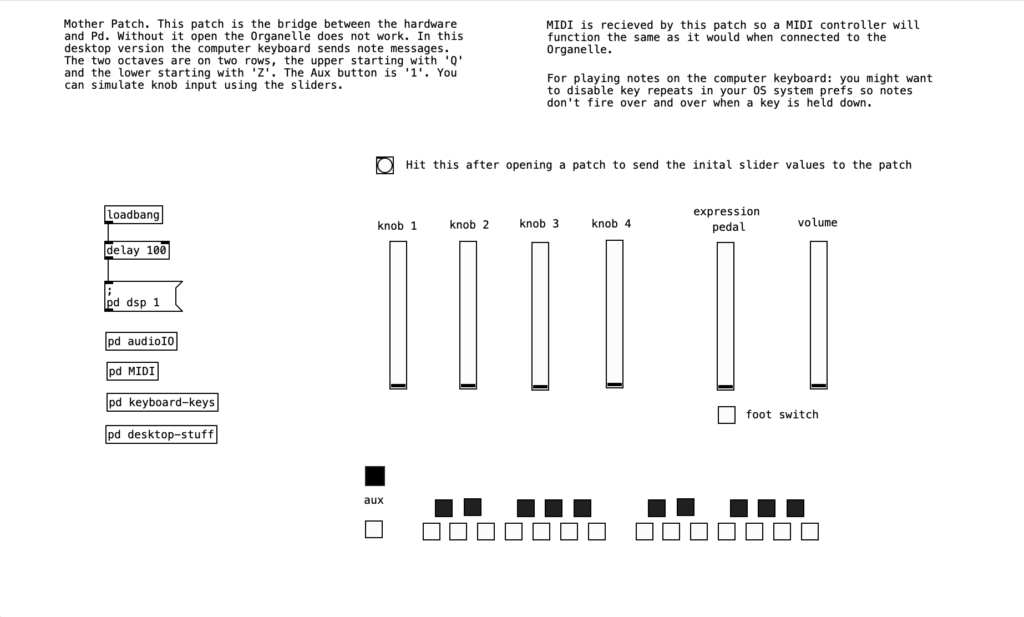

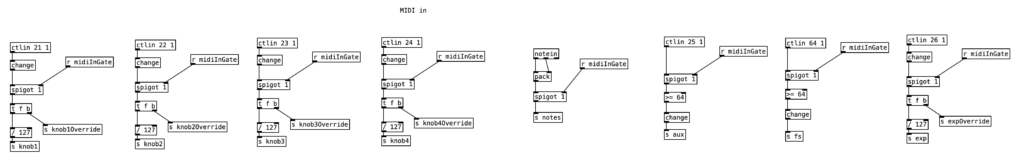

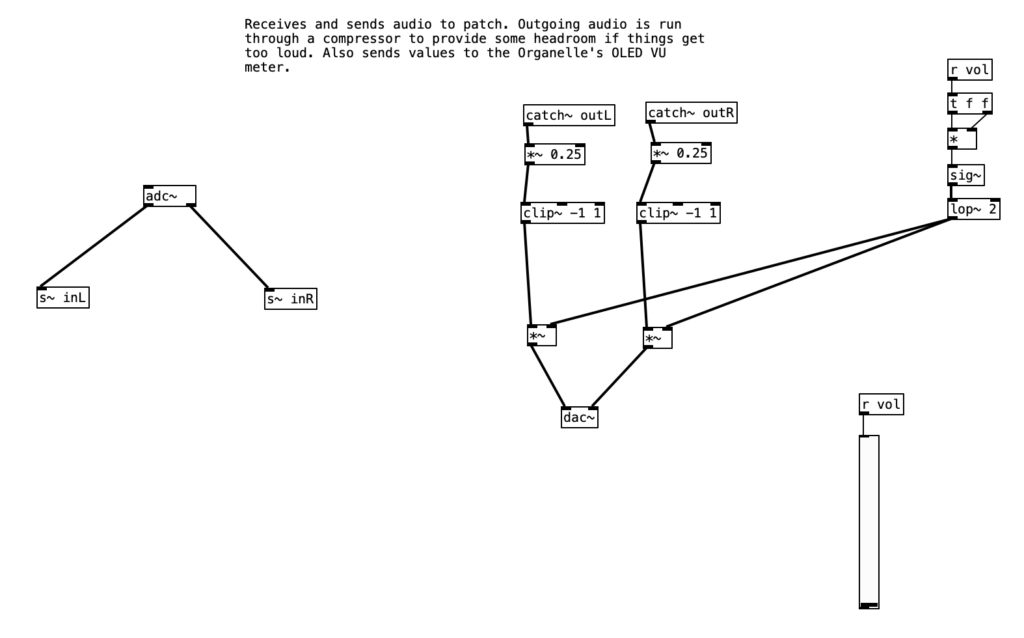

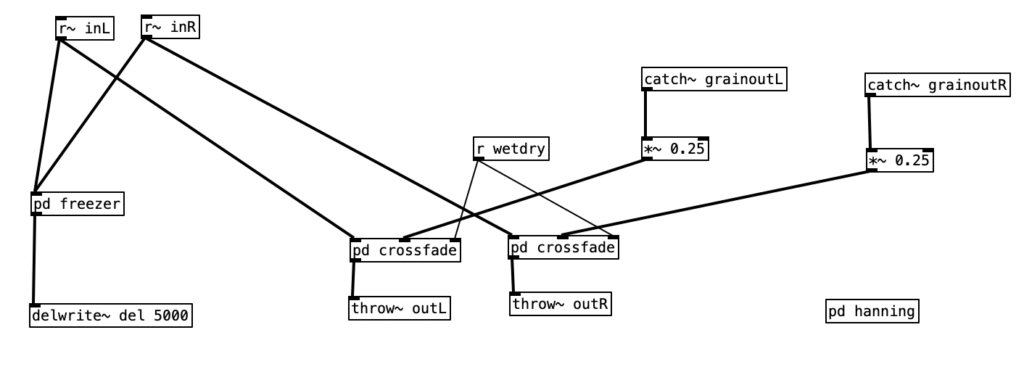

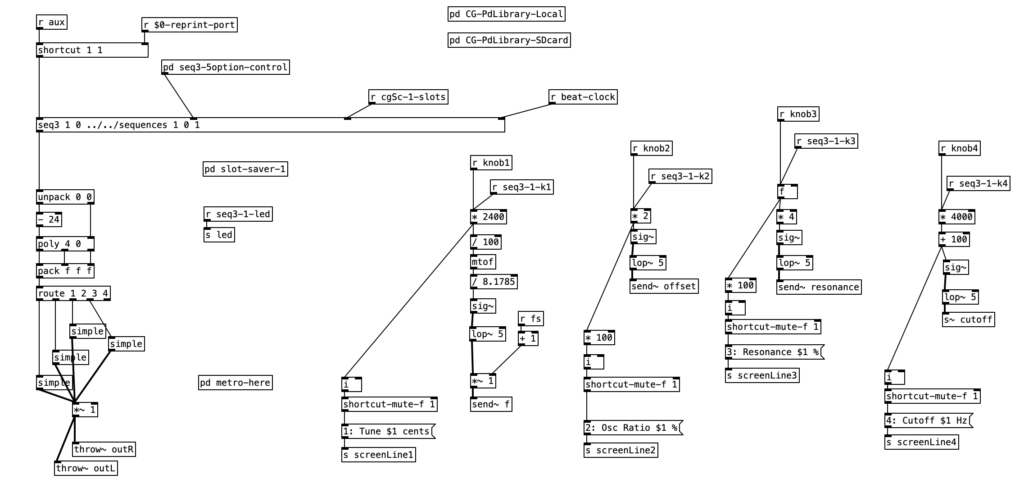

Since I went over the mother patch in the previous experiment, I’ll start by going over main.pd for the Analog Style patch. We can see that knob one controls the tuning of the patch, while knob two creates an offset frequency for a second oscillator. The third knob sets the resonance of the low pass filter, while the fourth knob (the one I am controlling using the WARBL) sets the cutoff frequency of the filter. To learn more about what low pass filters are, check out my blog entry on Low Pass Filters in Logic Pro. However, to summarize briefly in relation to the WARBL, when the amount of breath coming through is low, the cutoff frequency is set low as well, resulting in less sound (and only low frequency sound) coming out of the Organelle.

We can also see that this patch allows for sequencing when the aux button is down. However, we will not go through how sequencing works today. We will, however, go into simple, which is the subroutine that creates the sound. We can see two oscillators, blsaw, in this subroutine that generate sawtooth waves. For more information on subtractive synthesis waveforms (including sawtooth waves), check out my blog entry on Subtractive Synthesis Waveforms in Logic Pro. One of those two blsaw oscillators is modified by the offset of knob two. The mixture of these two oscillators is passed to a low pass filter, moog~. This object also receives a cutoff frequency at its center inlet, and a resonance value at its rightmost inlet. The outlet of this object is then attenuated slightly, *~ .75, before being sent to the subroutine’s outlet.
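The signal flow of simple can be approximated in a few lines of Python. This is a deliberately simplified sketch, not the patch itself: it uses naive (not band-limited) sawtooth waves in place of blsaw, swaps the resonant moog~ filter for a basic one-pole low pass filter with no resonance, and assumes the two oscillators are mixed equally and that the offset is added in Hz. All names are hypothetical.

```python
import math

SAMPLE_RATE = 44100


def saw(freq, n):
    """Naive sawtooth sample in [-1, 1) at sample index n."""
    phase = (freq * n / SAMPLE_RATE) % 1.0
    return 2.0 * phase - 1.0


def simple_voice(freq, offset, cutoff, num_samples):
    """Two detuned saws -> one-pole low pass -> 0.75 gain."""
    # Smoothing coefficient for a one-pole low pass at the given cutoff.
    alpha = 1.0 - math.exp(-2.0 * math.pi * cutoff / SAMPLE_RATE)
    state = 0.0
    out = []
    for n in range(num_samples):
        # Equal mix of the main oscillator and the offset oscillator.
        mixed = 0.5 * (saw(freq, n) + saw(freq + offset, n))
        state += alpha * (mixed - state)   # low pass filtering
        out.append(0.75 * state)           # final attenuation, as in *~ .75
    return out
```

Rendering the same note with a low cutoff and then a high cutoff shows the effect the breath control has: the lower the cutoff, the quieter and duller the output.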

Again, I’ve found the accompaniment generated by experiment.pd to be generic, but also fairly usable. It should be relatively easy to change the tempo, phrase length, or any number of musical patterns to create music that is stylistically different. Also, I enjoy the slowly evolving nature of the EYESY-generated video. I feel that turning changes on and off for various combinations of the five knobs adds a degree of subtlety that aids the dynamic nature of the video.

I am disappointed in my performance on the WARBL. I am still getting used to the EVI fingering on the instrument, so there are some very sour notes from time to time. However, I am very pleased with the range of the WARBL, as well as the subtle breath control the instrument provides. The fingering makes jumping octaves and fifths very easy. In future experiments I hope to get into hacking existing Organelle patches. I also plan to come up with variants of the videoautomation algorithm to create more sudden, less subtle changes to the EYESY’s settings.