February was a very busy month for me for family reasons, and it’ll likely be that way for a few months. Accordingly, I’m a bit late on my February experiment, and will likely be equally late with my final experiment as well. I have also stuck with programming for the EYESY, as I have kind of been on a roll in terms of coming up with ideas for it.

This month I created twelve programs for the EYESY, each of which displays a different constellation from the zodiac. I’ve named the series Constellations and have uploaded them to patchstorage. Each one works in exactly the same manner, so we’ll only look at the simplest one, Aries. The more complicated programs simply have more points and lines, with different coordinates and configurations, but are otherwise identical.

Honestly, one of the most surprising challenges of this experiment was trying to figure out whether there’s any consensus for a given constellation. Many of the constellations are fairly standardized, but for others there is real disagreement about which stars belong to the constellation. When there were variants to choose from I looked for consensus, but at times also took aesthetics into account. In particular I valued a balance between something that would look enticing and a reasonable number of points.

I printed images of each of the constellations, and traced them onto graph paper using a light box. I then wrote out the coordinates for each point, and then scaled them to fit in a 1280×720 resolution screen, offsetting the coordinates such that the image would be centered. These coordinates then formed the basis of the program.
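The scaling and centering step can be sketched in a few lines of Python. This is only an illustration of the arithmetic, not the process I actually followed on paper: the function name fit_to_screen and the margin parameter are made up for this example, and it assumes the traced coordinates already have y increasing downward, as on the screen.

```python
def fit_to_screen(points, width=1280, height=720, margin=45):
    """Uniformly scale (x, y) points and center them on the screen.

    Hypothetical helper: the name and the margin parameter are
    assumptions made for this illustration.
    """
    xs = [x for x, _ in points]
    ys = [y for _, y in points]
    min_x, min_y = min(xs), min(ys)
    # Guard against a zero-width or zero-height drawing.
    span_x = max(max(xs) - min_x, 1e-9)
    span_y = max(max(ys) - min_y, 1e-9)
    # One factor for both axes keeps the shape from being stretched.
    factor = min(float(width - 2 * margin) / span_x,
                 float(height - 2 * margin) / span_y)
    # Offsets that center the scaled bounding box on the screen.
    off_x = (width - factor * span_x) / 2.0
    off_y = (height - factor * span_y) / 2.0
    return [(int(round(off_x + factor * (x - min_x))),
             int(round(off_y + factor * (y - min_y))))
            for x, y in points]


# Made-up graph paper coordinates, not my actual tracing.
traced = [(0, 0), (10, 5)]
print(fit_to_screen(traced, margin=40))
```

Using a single scale factor for both axes is a design choice: it preserves the proportions of the traced shape, at the cost of leaving extra empty space along one axis.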

    import os
    import pygame
    import time
    import random
    import math

    def setup(screen, etc):
        pass

    def draw(screen, etc):
        # Knob 4 sets the line width, knob 5 the background color.
        linewidth = int (1+(etc.knob4)*10)
        etc.color_picker_bg(etc.knob5)
        # Knob 1: a single offset added to both x and y.
        offset=(280*etc.knob1)-140
        # Knob 3: how far the audio can move each point (never below 5).
        scale=5+(140*(etc.knob3))
        # Starting color, taken from the first three audio samples.
        r = int (abs (100 * (etc.audio_in[0]/33000)))
        g = int (abs (100 * (etc.audio_in[1]/33000)))
        b = int (abs (100 * (etc.audio_in[2]/33000)))
        # Step each channel toward the middle: down if above 50, up otherwise.
        if r>50:
            rscale=-5
        else:
            rscale=5
        if g>50:
            gscale=-5
        else:
            gscale=5
        if b>50:
            bscale=-5
        else:
            bscale=5
        # Knob 2: number of superimposed copies of the constellation.
        j=int (1+(8*etc.knob2))
        for i in range(j):
            # Each copy reads its own block of 8 audio samples,
            # one sample per coordinate of the four points.
            AX=int (offset+45+(scale*(etc.audio_in[(i*8)]/33000)))
            AY=int (offset+45+(scale*(etc.audio_in[(i*8)+1]/33000)))
            BX=int (offset+885+(scale*(etc.audio_in[(i*8)+2]/33000)))
            BY=int (offset+325+(scale*(etc.audio_in[(i*8)+3]/33000)))
            CX=int (offset+1165+(scale*(etc.audio_in[(i*8)+4]/33000)))
            CY=int (offset+535+(scale*(etc.audio_in[(i*8)+5]/33000)))
            DX=int (offset+1235+(scale*(etc.audio_in[(i*8)+6]/33000)))
            DY=int (offset+675+(scale*(etc.audio_in[(i*8)+7]/33000)))
            # Nudge the color a little for each copy.
            r = r+rscale
            g = g+gscale
            b = b+bscale
            thecolor=pygame.Color(r,g,b)
            # Connect the four stars of Aries: A to B to C to D.
            pygame.draw.line(screen, thecolor, (AX,AY), (BX, BY), linewidth)
            pygame.draw.line(screen, thecolor, (BX,BY), (CX, CY), linewidth)
            pygame.draw.line(screen, thecolor, (CX,CY), (DX, DY), linewidth)

In these programs knob 1 is used to offset the image. Since the same offset is added to both the x and y coordinates, rotating the knob moves the image along a diagonal from upper left to lower right. The second knob controls the number of superimposed versions of the given constellation. Knob 3 controls the scale of how much the image can vary. Knob 4 controls the line width, and the final knob controls the background color.

The new programming element here is a for statement. Namely, I use for i in range (j) to create several superimposed versions of the same constellation. As previously stated, the number of versions is controlled by knob 2, using the code j=int (1+(8*etc.knob2)). This allows for anywhere from 1 to 8 superimposed images across nearly all of the knob's travel, with a ninth appearing only if the knob reads exactly 1.0.
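To see how the knob maps to a number of copies, here is a small sketch that runs the same expression outside the EYESY. The function name copies_for_knob is made up for this example, and it assumes the knob value is a float from 0.0 to 1.0.

```python
def copies_for_knob(knob_value):
    """Same arithmetic as j in the program; the name is hypothetical."""
    return int(1 + (8 * knob_value))


# Walk the knob from fully counterclockwise to fully clockwise.
for knob_value in (0.0, 0.25, 0.5, 0.99, 1.0):
    print(knob_value, copies_for_knob(knob_value))
```

Because int() truncates, the count only changes at every eighth of the knob's travel, and the extra copy shows up only at the very top of the range.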

Inside this loop, each point is offset and scaled in relation to audio data. For any given point, the base coordinate is added to the offset, and then the scale value, multiplied by data from etc.audio_in, is added to that. Using different values within this array allows each point in the constellation to react differently. Using the variable i in the array index also creates differences between the points in each of the superimposed versions. The variable scale is always at least 5, allowing for some amount of wiggle in all circumstances.
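The per-point calculation can be written more generally as a pair of small functions. This is a sketch rather than the code in the patch: the names jitter_point and jittered_constellation are made up, the samples list stands in for etc.audio_in, and I divide by 33000.0 here (an assumption on my part) so the result is fractional regardless of Python version.

```python
ARIES = [(45, 45), (885, 325), (1165, 535), (1235, 675)]


def jitter_point(base, offset, scale, samples, start):
    """Shift one (x, y) point by the offset plus scaled audio samples.

    Hypothetical helper; samples stands in for etc.audio_in.
    """
    x = int(offset + base[0] + scale * (samples[start] / 33000.0))
    y = int(offset + base[1] + scale * (samples[start + 1] / 33000.0))
    return (x, y)


def jittered_constellation(points, offset, scale, samples, copy_index):
    """Give every point in one copy its own pair of audio samples."""
    # Each copy starts at its own block: 2 samples per point.
    start = copy_index * 2 * len(points)
    return [jitter_point(p, offset, scale, samples, start + 2 * n)
            for n, p in enumerate(points)]


# With silence, the points stay at their base coordinates.
silence = [0] * 100
print(jittered_constellation(ARIES, 0, 5, silence, 0))
```

For the four points of Aries, copy_index times 8 gives the same starting index as i*8 in the program, so each superimposed copy reads a different block of samples.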

Originally I had used data from etc.audio_in inside the loop to set the color of the lines. This resulted in drastically different colors for each of the superimposed constellations in a given frame. I decided to tone this down a bit by reading etc.audio_in before the loop starts, so that each version of the constellation within a given frame is largely the same color. That being said, to create some visual interest, I use rscale, gscale, and bscale to move the color in one direction for each superimposed version. Since there are only about 8 superimposed images at most, I used the value 5 to step the red, green, and blue values of the color. When the original red, green, or blue value is 50 or less I use 5, which moves the value up. When the original value is more than 50 I use -5, which moves the value down. The program chooses between 5 and -5 using if/else statements.
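The color stepping for a single channel can be sketched like this. The function name color_steps is made up for the example. It mirrors the order of operations in the program, where the step is applied before each copy is drawn.

```python
def color_steps(start, copies, threshold=50, step=5):
    """List the channel value used for each superimposed copy.

    Hypothetical helper mirroring the rscale/gscale/bscale logic.
    """
    # Values above the threshold step down, all others step up.
    direction = -step if start > threshold else step
    # The step is applied before drawing, so the first copy is start + direction.
    return [start + direction * (n + 1) for n in range(copies)]


print(color_steps(20, 3))
print(color_steps(80, 3))
```

Since the starting values sit between 0 and 99 and each channel always steps toward the middle, the results stay well inside the 0 to 255 range that pygame.Color accepts.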

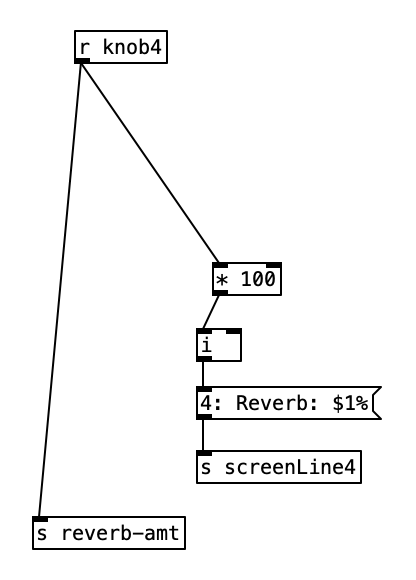

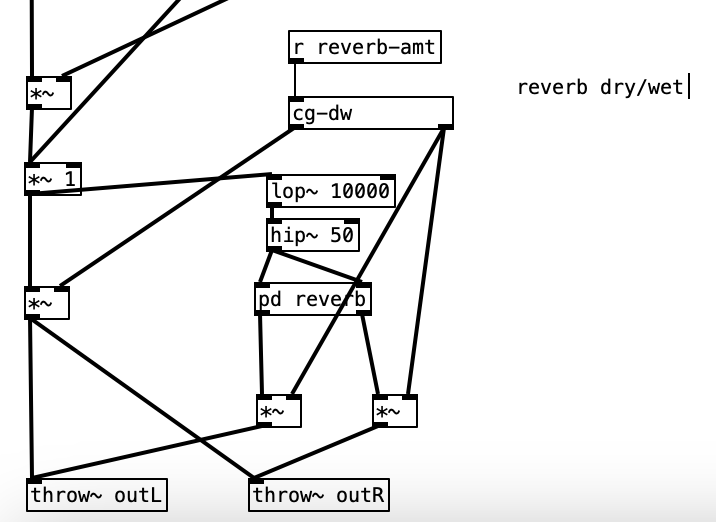

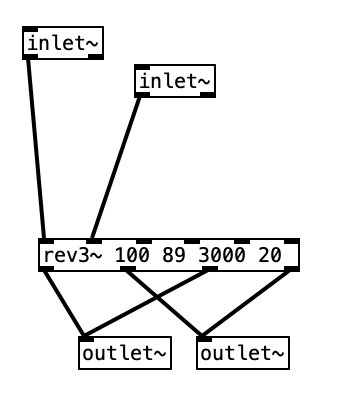

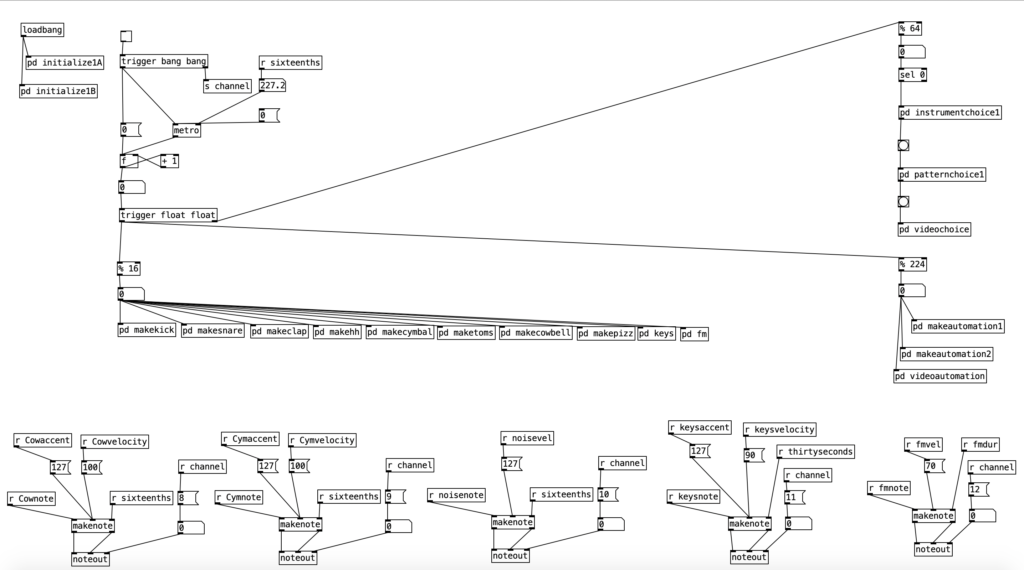

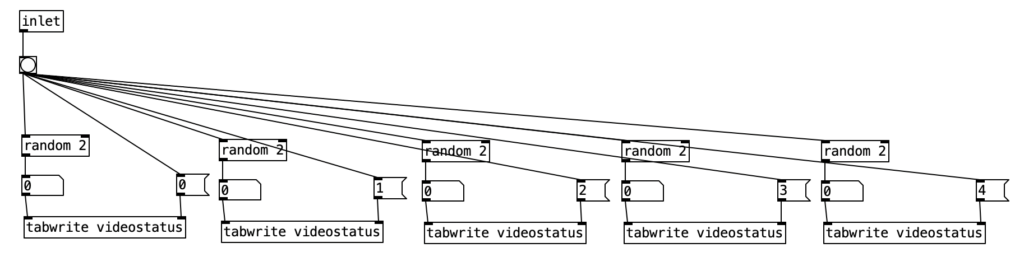

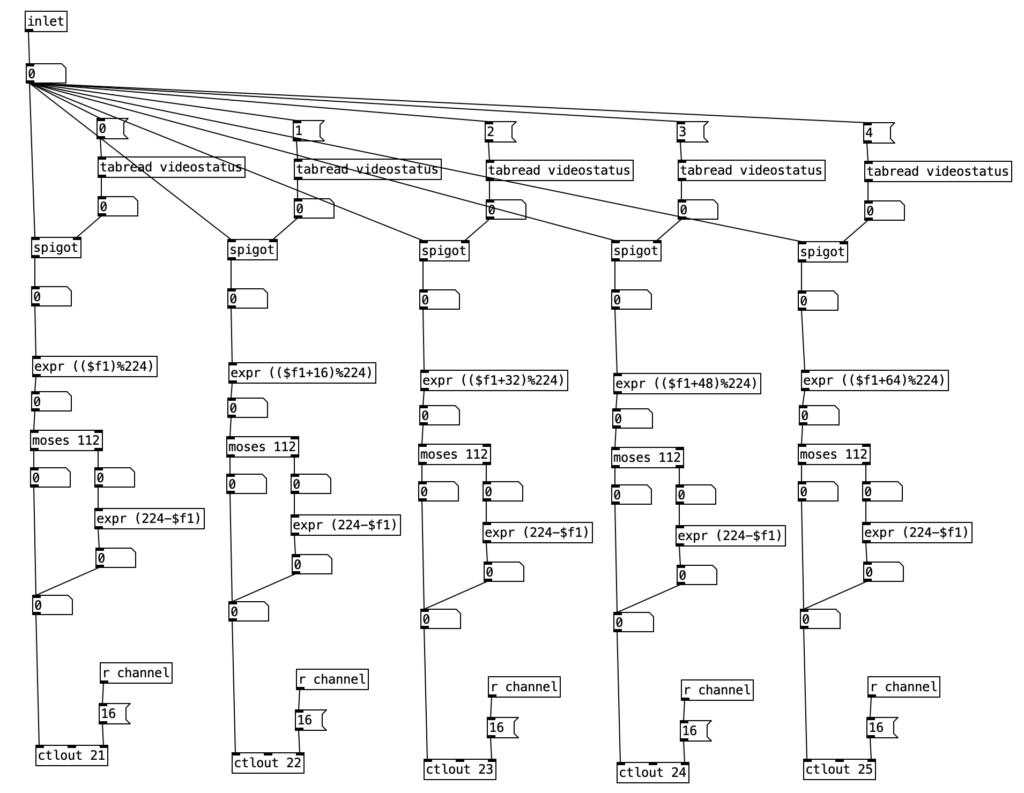

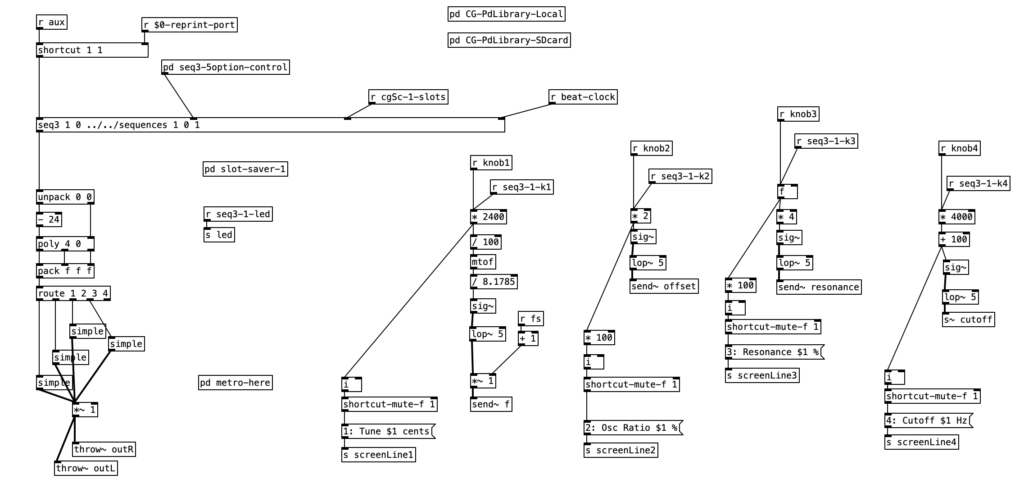

The music in the example videos comes from algorithms that will be used to generate accompaniment for a third of my next major studio album. These algorithms grew directly out of my work on these experiments. I did add one little bit of code to these Pure Data algorithms, however. Since I have 6 musical examples but 12 EYESY patches, I added a bit of code that randomly chooses one of two EYESY patches and sends a program (patch) change to the EYESY on MIDI channel 16 at the beginning of each phrase.
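The patch-switching idea can be sketched in Python, though the real version lives in Pure Data and looks nothing like this. The function names are made up, and the program numbers in the example are placeholders, since which number selects which EYESY patch depends on how the patches are loaded. The one fixed fact is the MIDI message itself: a program change is a status byte of 0xC0 plus the zero-based channel, followed by one program byte.

```python
import random


def program_change(channel, program):
    """Build the two raw bytes of a MIDI program change message.

    channel is 1 to 16 as musicians count it; program is 0 to 127.
    """
    if not 1 <= channel <= 16:
        raise ValueError("channel must be 1-16")
    if not 0 <= program <= 127:
        raise ValueError("program must be 0-127")
    # Status byte: 0xC0 for program change, plus the zero-based channel.
    return bytes(bytearray([0xC0 | (channel - 1), program]))


def pick_patch(pair, rng=random):
    """Randomly choose one of the two patches assigned to a piece."""
    return rng.choice(pair)


# Placeholder program numbers for one musical example (an assumption).
aries_and_taurus = (0, 1)
message = program_change(16, pick_patch(aries_and_taurus))
print(list(bytearray(message)))
```

The sketch only builds the bytes. Actually sending them at the start of each phrase would need a MIDI output, which is left out here.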

While I may not use these algorithms for the videos for the next studio album, I will likely use them in live performances. I plan on doing a related set of EYESY programs for my final experiment next month.

Experiment 11A: Aries & Taurus:

Experiment 11B: Gemini & Cancer:

Experiment 11C: Leo & Virgo:

Experiment 11D: Libra & Scorpio:

Experiment 11E: Sagittarius & Capricorn:

Experiment 11F: Aquarius & Pisces: