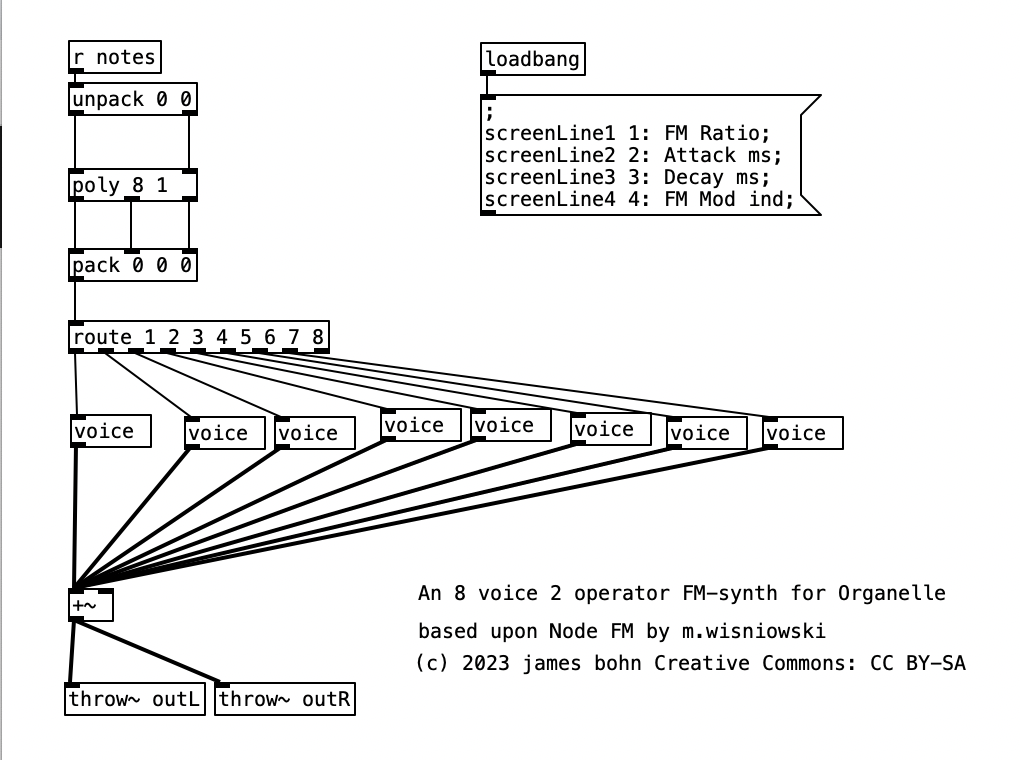

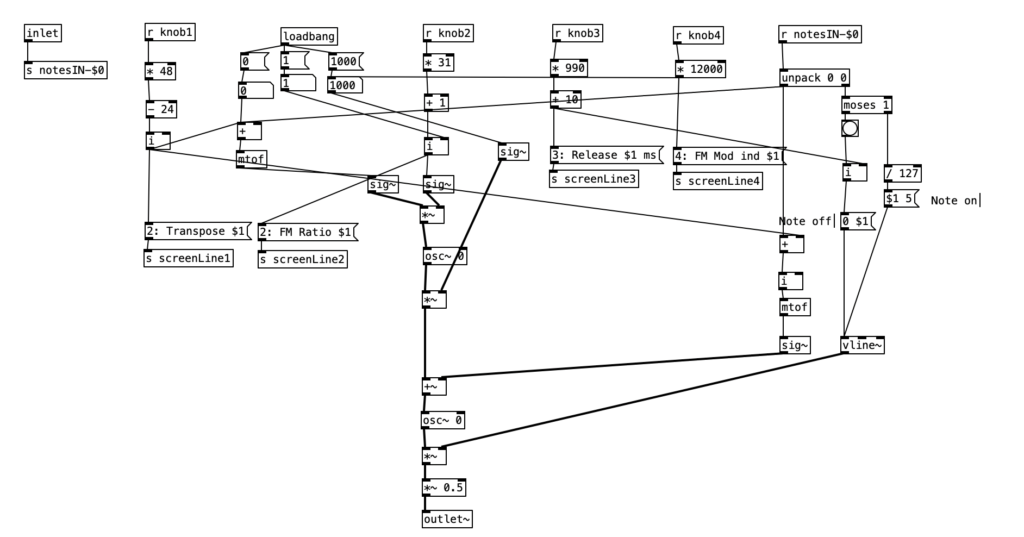

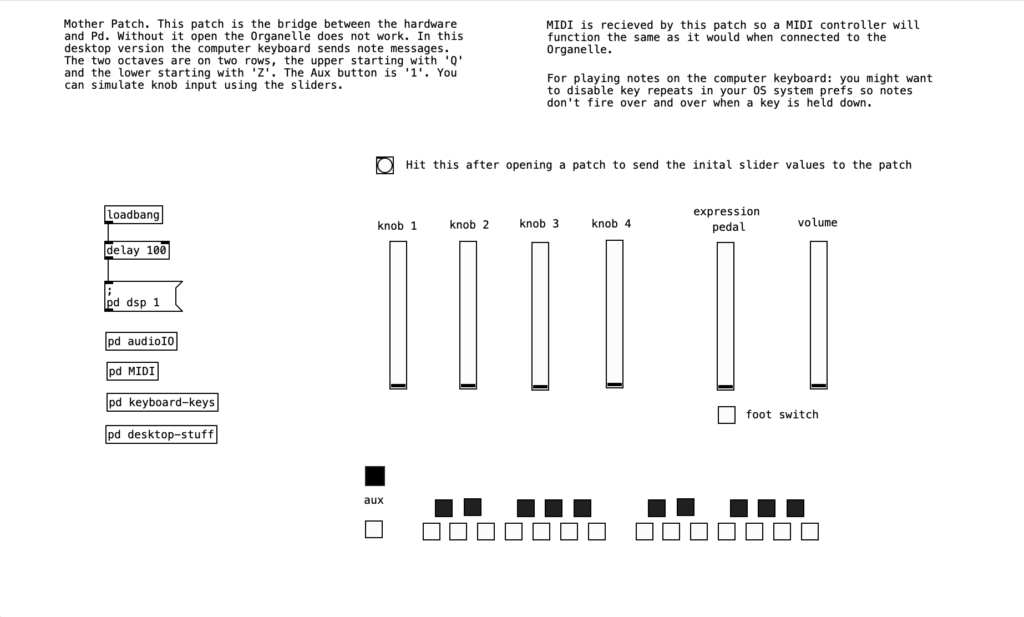

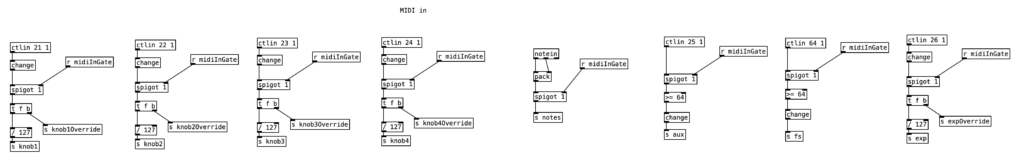

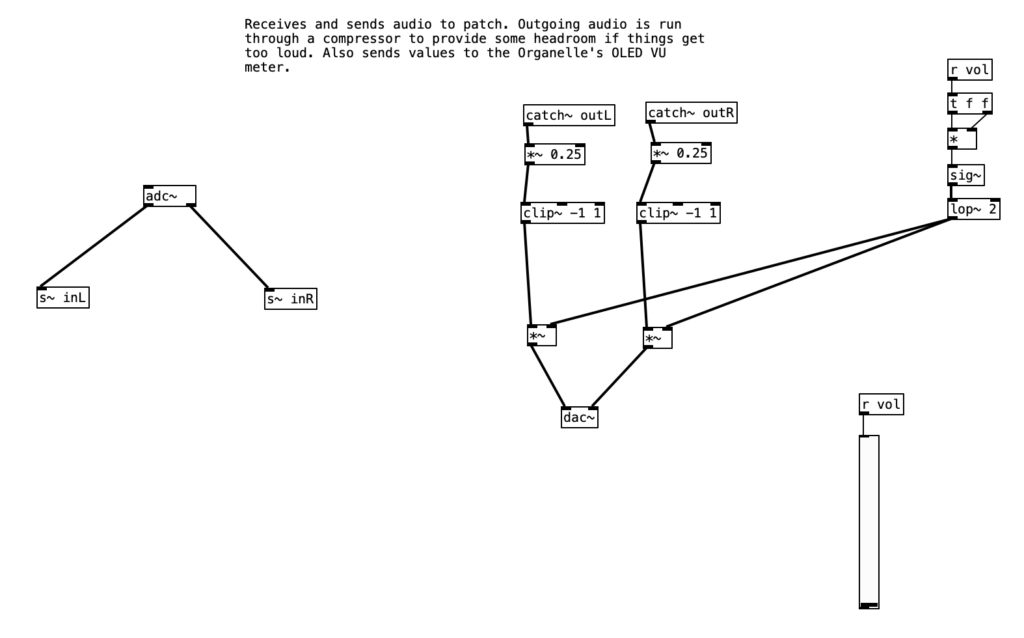

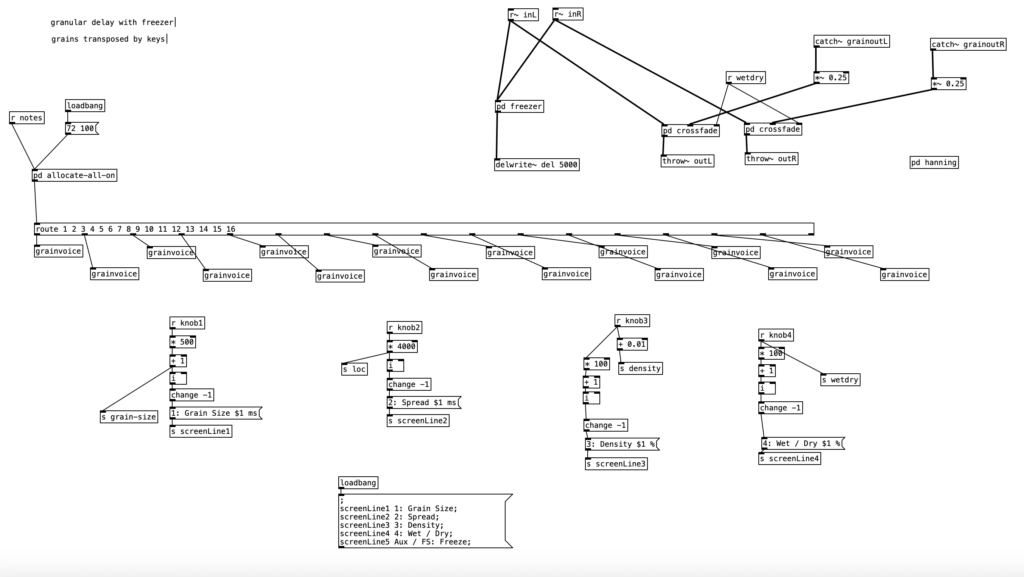

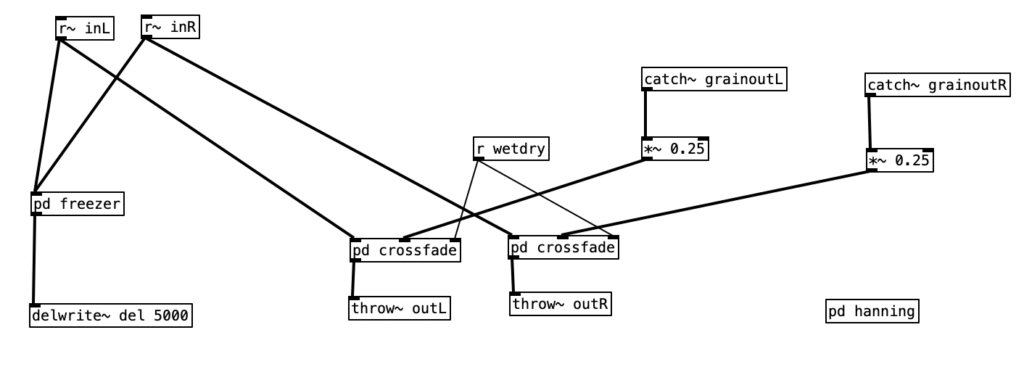

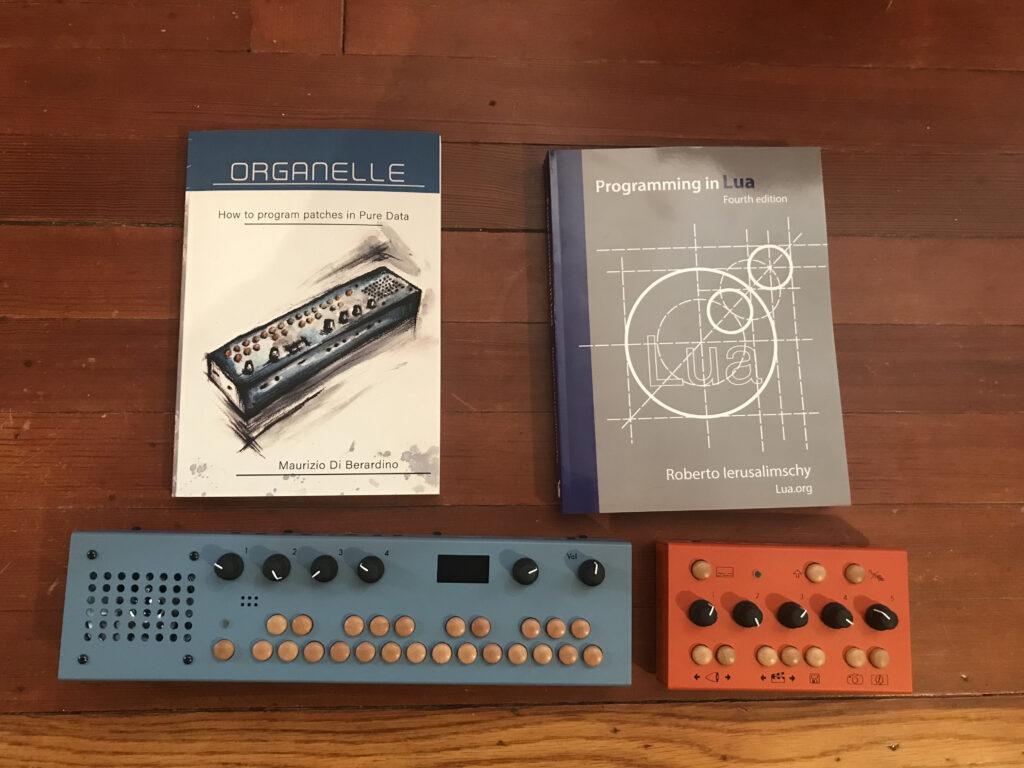

This month’s experiment is a considerable step forward on three fronts: Organelle programming, EYESY programming, and computer-assisted composition. In terms of Organelle programming, rather than taking a pre-existing algorithm and altering it (or hacking it) to do something different, I decided to create a patch from scratch. I first created it in PureData, and then reprogrammed it to work specifically with the Organelle. Creating it in PureData first meant that I used horizontal sliders to represent the knobs, and that I sent the output to the DAC (digital-to-analog converter). When coding for the Organelle, you instead use a throw~ object to output the audio.

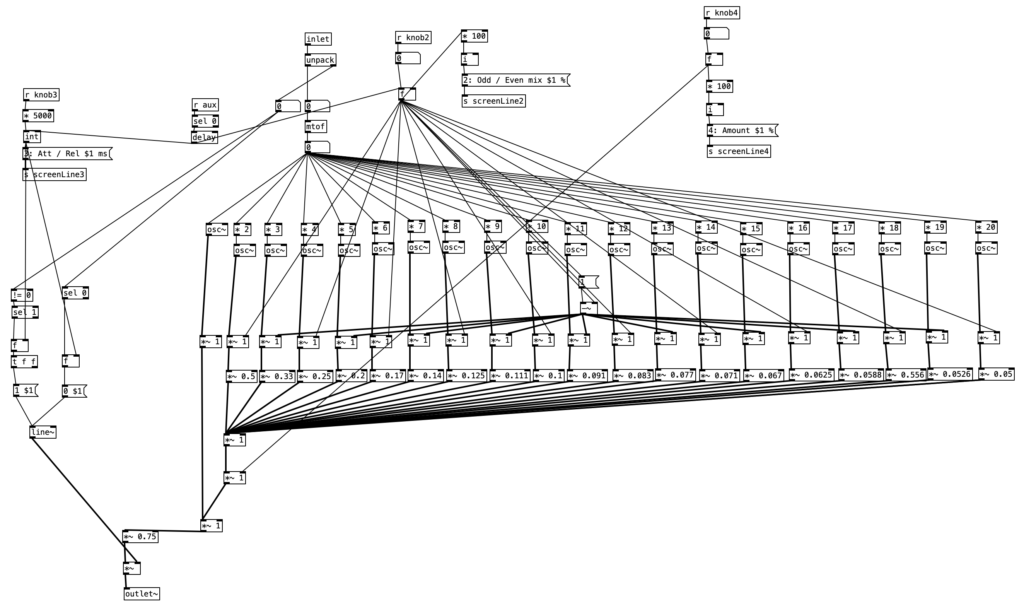

The patch I wrote, Wavetable Sampler, reimagines a digital sampler, and in doing so, basically reinvents wavetable synthesis. The conventional approach to sampling is to travel through the sound in a linear fashion from beginning to end. The speed at which we travel through the sound determines both its length and its pitch: faster translates to shorter and higher pitched, while slower means longer and lower pitched.

I wanted to try using an LFO (low frequency oscillator) to control where we are in a given sample. Using this technique, the sound goes back and forth between two points in the sample continuously. In my programming I quickly learned that two parameters are strongly linked, namely the frequency of this oscillator and its amplitude, which becomes the distance travelled in the sample. If you want the sample to play at normal speed, that is, so that we recognize the original sample, those two values need to be inversely proportional. Put simply, a low frequency requires the oscillator to travel farther through the sample, while a higher frequency needs only a small span. Thus, we see the object expr (44100 / ($f1)), with the number 44,100 being the sample rate of the Organelle. Dividing the sample rate by the frequency of the oscillator yields the number of samples that make up a cycle of sound at that frequency.
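As a rough illustration of that division, here is a minimal Python sketch of what the expr object computes. The function name `samples_per_cycle` is made up for this example, and how the patch then applies the result to the oscillator's amplitude is not shown here.

```python
SAMPLE_RATE = 44100  # sample rate given in the text for the Organelle


def samples_per_cycle(freq_hz):
    """Python stand-in for expr (44100 / ($f1)): samples in one cycle at freq_hz."""
    return SAMPLE_RATE / freq_hz


# Lower frequencies cover more samples per cycle, higher frequencies fewer.
print(samples_per_cycle(0.5))   # 88200.0
print(samples_per_cycle(1))     # 44100.0
print(samples_per_cycle(441))   # 100.0
```

Halving the frequency doubles the number of samples per cycle, which is the inverse relationship between oscillator frequency and distance travelled.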

Obviously, a user might want to move at a different rate than what would be normal. However, making that a separate control spares the user from having to mentally calculate an appropriate sample increment for normal-speed playback. I also expected that a user would want to control where we are in a much longer sample. For instance, the sample I am using with this instrument is quite long. It is an electronic cello improvisation I did recently that lasts over four minutes.

The sound I got out of this instrument was largely what I expected. However, there was one aspect that stood out more than I thought it would. I am using a sine wave oscillator in the patch. This means that the sound travels quickly during the rise and fall portions of the waveform, but as it approaches the peak and trough of the waveform it slows down quite dramatically. At low frequencies this results in extreme pitch changes. I could easily have solved this issue by switching to a triangle waveform, as the speed would be constant using such a waveform. However, I decided that the oddness of these pitch changes was the feature of the patch, and not the bug.
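The difference between the two waveforms can be checked numerically. The following Python sketch is not taken from the patch. It uses hypothetical helper names and normalized positions from -1 to 1, and estimates how fast the read position moves at different points in one cycle.

```python
import math


def sine_pos(phase):
    """Read position for a sine oscillator, normalized to -1..1 (phase in cycles)."""
    return math.sin(2 * math.pi * phase)


def triangle_pos(phase):
    """Read position for a triangle oscillator, normalized to -1..1."""
    p = phase % 1.0
    return 4 * p - 1 if p < 0.5 else 3 - 4 * p


def speed(pos_fn, phase, dphase=1e-4):
    """Approximate rate of travel: change in position per unit of phase."""
    return abs(pos_fn(phase + dphase) - pos_fn(phase)) / dphase


# Sine: fast at the zero crossing, nearly stationary at the peak.
print(speed(sine_pos, 0.0), speed(sine_pos, 0.25))
# Triangle: the same speed on every straight segment.
print(speed(triangle_pos, 0.1), speed(triangle_pos, 0.3))
```

The sine's speed is close to 2π at the zero crossing and close to zero at the peak, while the triangle stays at 4 along its ramps. Since playback speed sets pitch, a sine sweep bends the pitch continuously where a triangle would hold it steady.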

While I intended the instrument to be used at low frequencies, I found that it was actually far more practical and conventionally useful at audio frequencies. Human hearing starts around 20 Hz, which means if you were able to clap your hands 20 times in a second you would begin to hear it as a very low pitch rather than a series of individual claps. One peculiarity of sound synthesis is that if you repeat a series of samples, no matter how random they may be, at a frequency that lies within human hearing, you will hear it as a pitch that has that frequency. The timbre of two different sets of samples at the same frequency may vary greatly, but we will hear them as being, more or less, the same pitch.

Thus, as we move the frequency of the oscillator up into the audio range, it turns into somewhat of a wavetable synthesizer. While wavetable synthesis was created in 1958, it didn’t really exist in its full form until 1979. At this point in the history of synthesis it was an alternative to FM synthesis, which could offer robust sound possibilities but was very difficult to program, and digital sampling, which could recreate any sound that could be recorded but was extremely expensive due to the cost of memory. In this sense wavetable synthesis is a data saving tool. If you imagine a ten second recording of a piano key being struck and held, the timbre of that sound changes dramatically over those ten seconds, but ten seconds of sampling time in 1980 was very expensive. Imagine instead that we digitize individual waveforms at five different locations in the ten second sample. We can then gradually interpolate between those five waveforms to create a believable (in 1980) approximation of the original sample. That being said, wavetable synthesis also created a rich, interesting approach to synthesizing new sounds, such that the technique is still somewhat commonly used today.
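The interpolation idea can be sketched in a few lines of Python. This is a generic linear crossfade between stored single-cycle waveforms, with hypothetical function names and toy data. It does not reproduce any particular historical instrument.

```python
def crossfade(wave_a, wave_b, mix):
    """Linearly interpolate two equal-length single-cycle waveforms (mix 0..1)."""
    return [(1 - mix) * a + mix * b for a, b in zip(wave_a, wave_b)]


def wavetable_frame(tables, position):
    """Return the waveform at a fractional position across a list of tables.

    position runs from 0 (first table) to len(tables) - 1 (last table).
    Requires at least two tables.
    """
    position = max(0.0, min(position, len(tables) - 1))
    index = min(int(position), len(tables) - 2)
    return crossfade(tables[index], tables[index + 1], position - index)


# Three tiny stand-in "waveforms" of two samples each.
tables = [[0.0, 0.0], [1.0, 1.0], [2.0, 2.0]]
print(wavetable_frame(tables, 0.5))   # [0.5, 0.5]
print(wavetable_frame(tables, 1.25))  # [1.25, 1.25]
```

Sweeping `position` slowly from the first table to the last stands in for the changing timbre of the original recording while storing only a handful of cycles.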

When we move the oscillator for Wavetable Sampler into the audio range, we are essentially creating a wavetable. The parameter that affects how far the oscillator travels through the sample creates a very interesting phenomenon at audio rates. When that value is very low, the sample values vary very little. This results in waveforms that approach a sine wave in their simplicity and spectrum. As this value increases, more values are included, which may differ greatly from each other. This translates into adding harmonics to the spectrum. Which harmonics are added depends upon the wavetable, or snippet of a sample, in question. However, as we turn up the value, it tends to add harmonics from lower ones to higher ones. At extreme values, in this case ten times a normal sample increment, the pitch of the fundamental frequency starts to be overtaken by the predominant frequencies in that wavetable’s spectrum. One final element of interest in the construction of the instrument, in relation to wavetable synthesis, is the use of a sine wave for the oscillator. Since the rate of change speeds up during the rise and fall portions of the waveform and slows down near the peak and the valley of the wave, there are portions of the resulting waveform that are rich in change and other portions where the rate of change is slow.

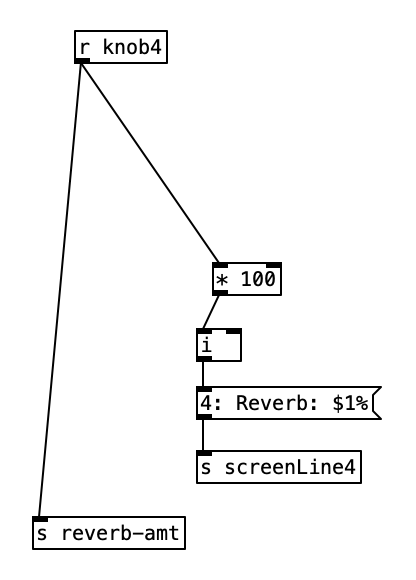

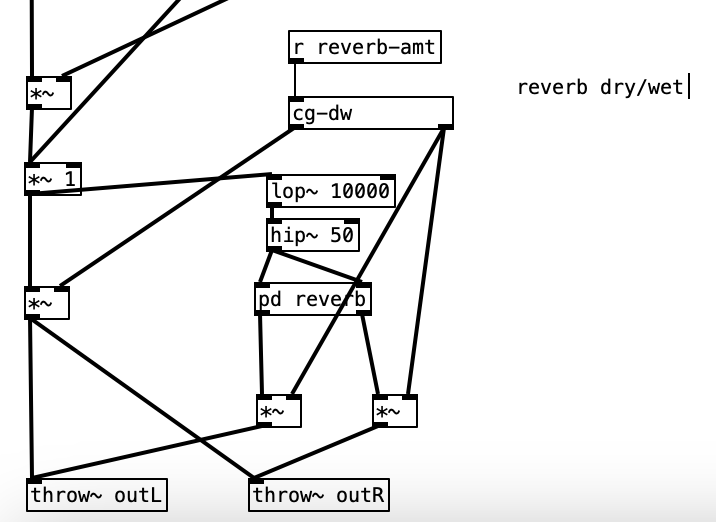

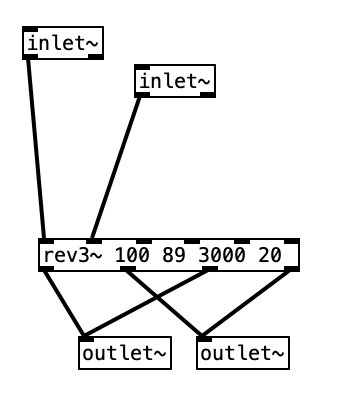

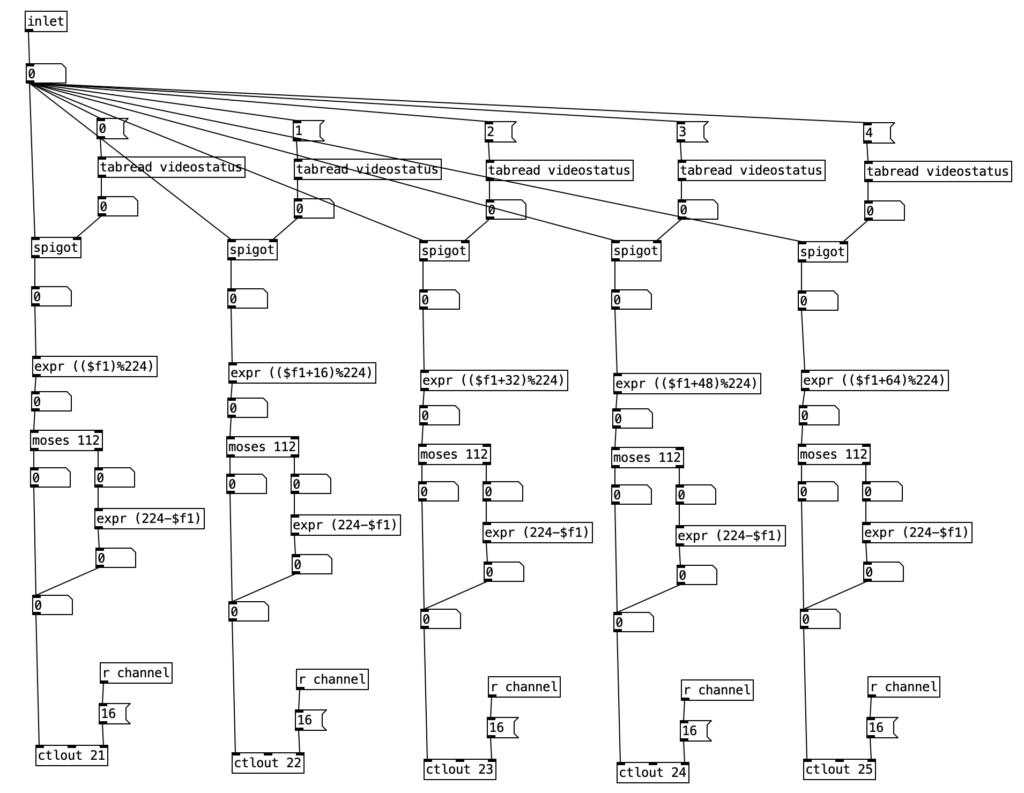

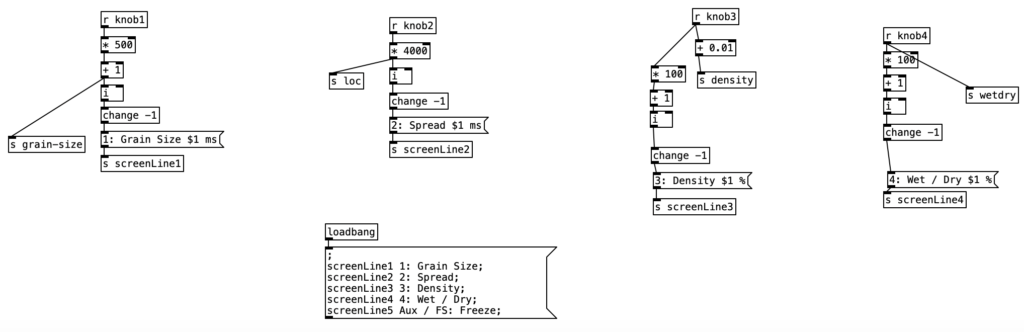

Since the distance that the oscillator travels seems to act like a control over the harmonic spectrum, I decided to put that on knob four, as that is the knob I have been controlling via breath pressure with the WARBL. On the Organelle I set knob one to set the index of where we are in the four-minute-plus sample. The frequency of the oscillator is set by the key that is played, but I use the second knob as a multiplier of this value. This knob is scaled from .001, which will yield a true low frequency oscillator, to 1, which will be at the pitch played (.5 will be down an octave, .25 will be down two octaves, etc.). As stated earlier, the fourth knob is used to modify the amplitude of the oscillator, affecting the range of samples that will be cycled through. This left the third knob unused, so I decided to use that as a decay setting.
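The relationship between the multiplier and the resulting pitch can be worked out with a short Python sketch. The function names are hypothetical, and the linear knob curve in `knob_to_multiplier` is an assumption, since the text gives only the end points of the range (.001 and 1).

```python
import math


def knob_to_multiplier(knob, low=0.001, high=1.0):
    """ASSUMED linear mapping from a 0..1 knob value onto the .001..1 range."""
    return low + (high - low) * knob


def oscillator_freq(note_freq_hz, multiplier):
    """Oscillator frequency: the played note's frequency times the multiplier."""
    return note_freq_hz * multiplier


def octaves_below(multiplier):
    """How many octaves below the played pitch a given multiplier lands."""
    return -math.log2(multiplier)


print(oscillator_freq(440.0, 0.5))  # 220.0
print(octaves_below(0.5))           # 1.0
print(octaves_below(0.25))          # 2.0
```

At the bottom of the range, a multiplier of .001 turns a 440 Hz note into a 0.44 Hz oscillator, which is well below the audio range.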

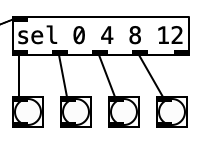

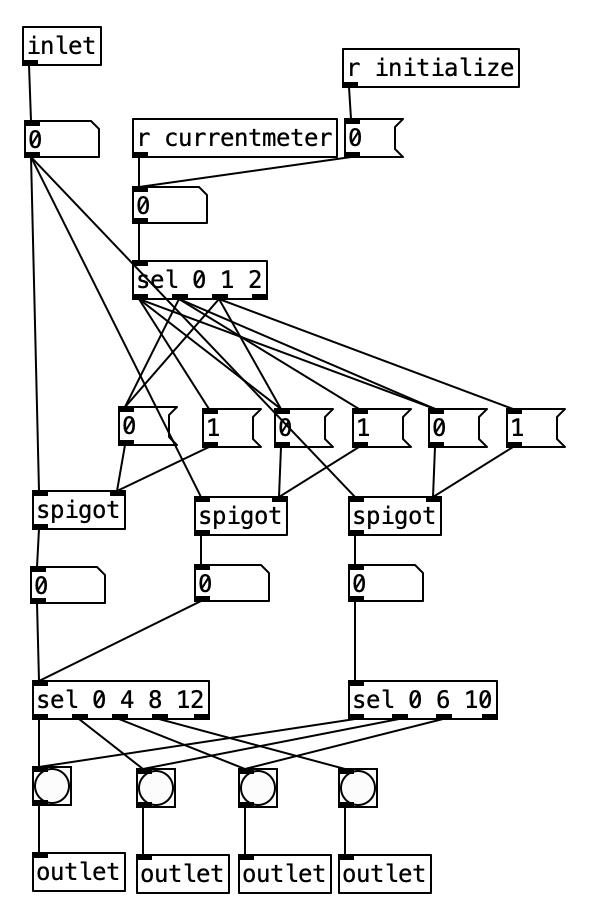

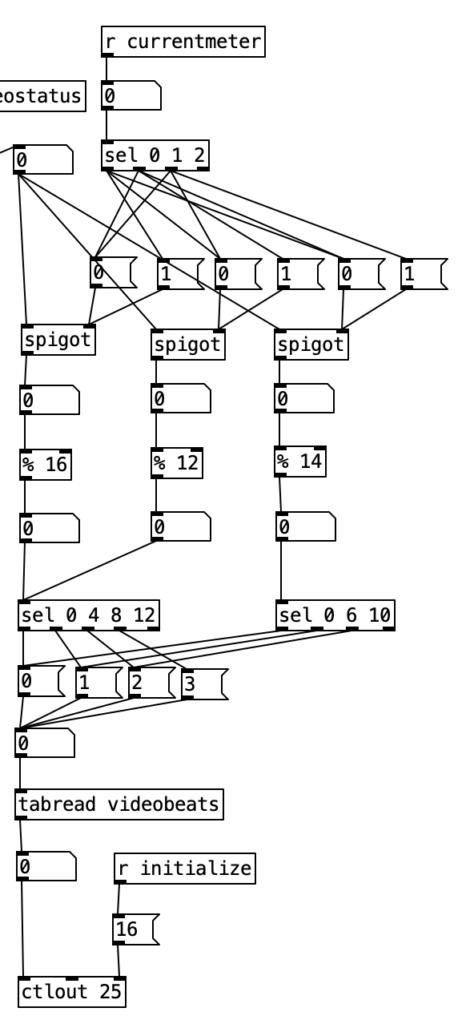

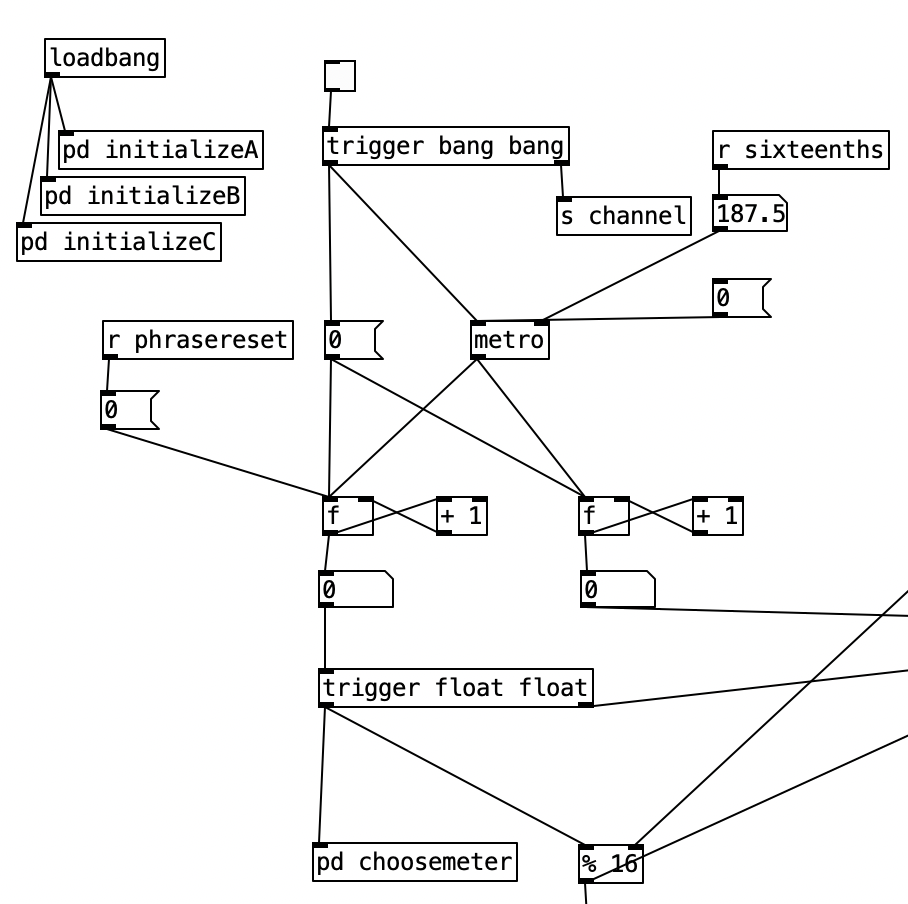

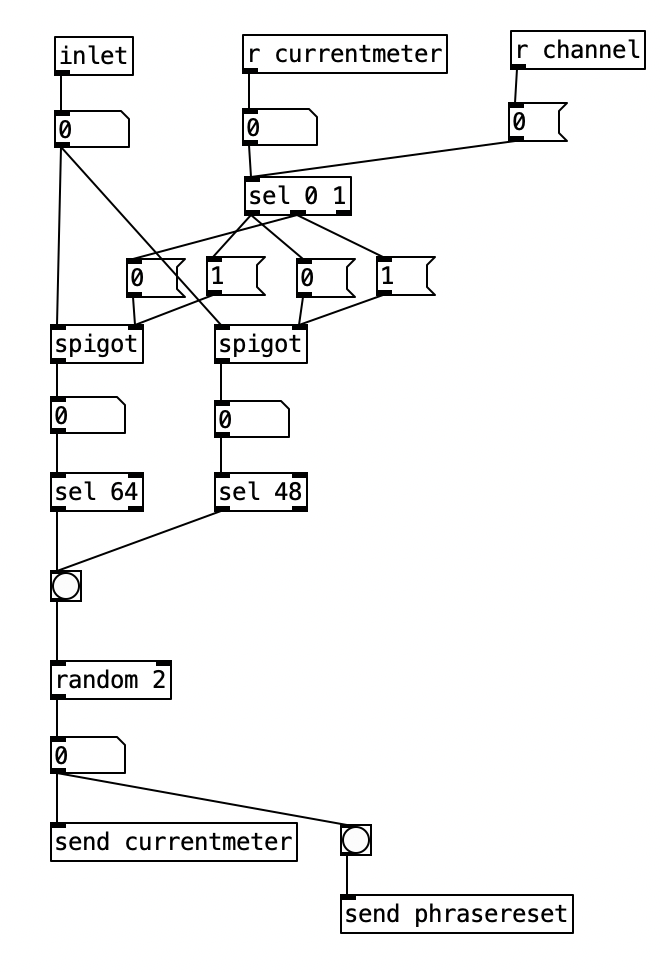

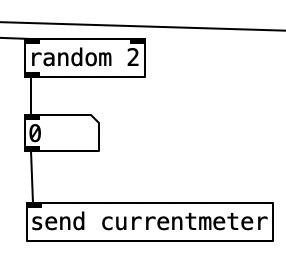

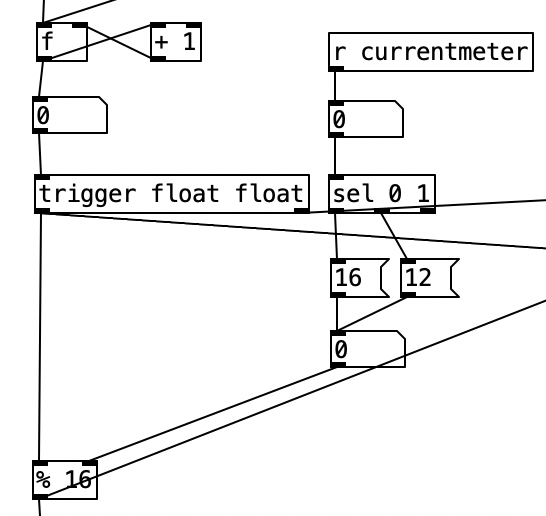

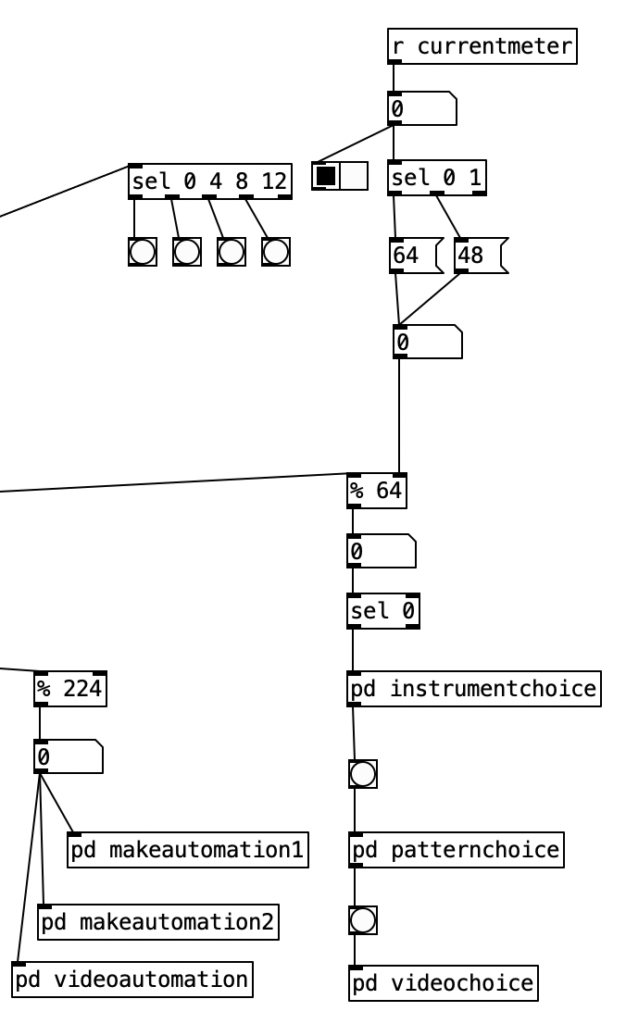

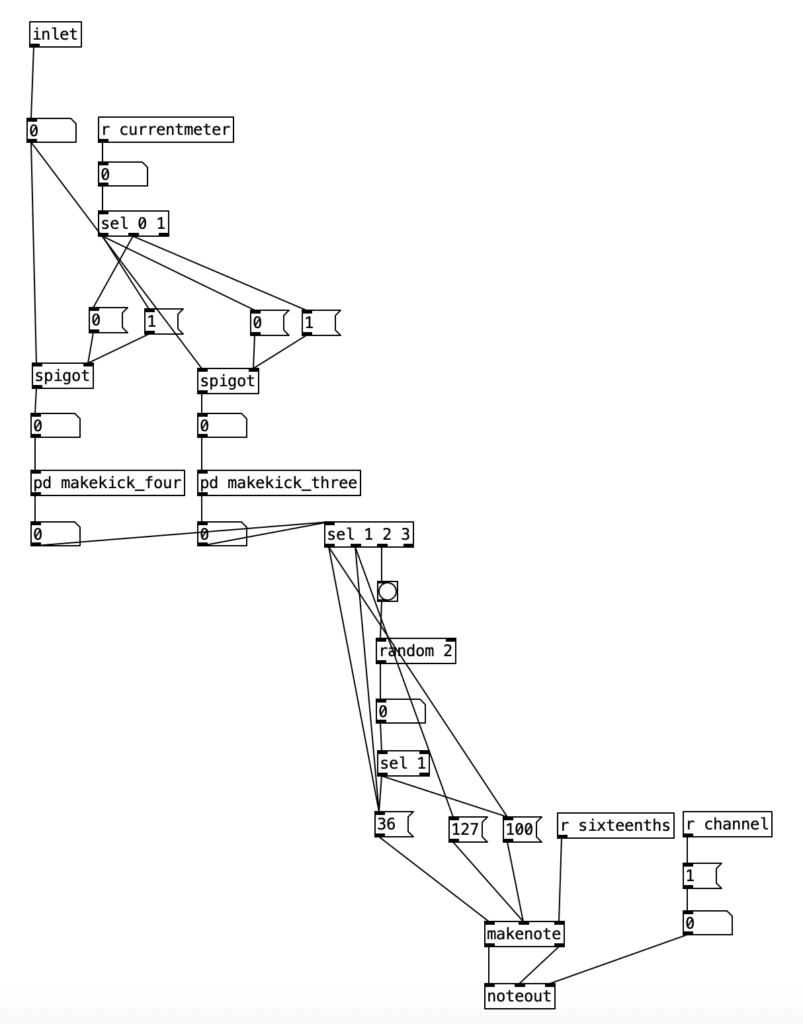

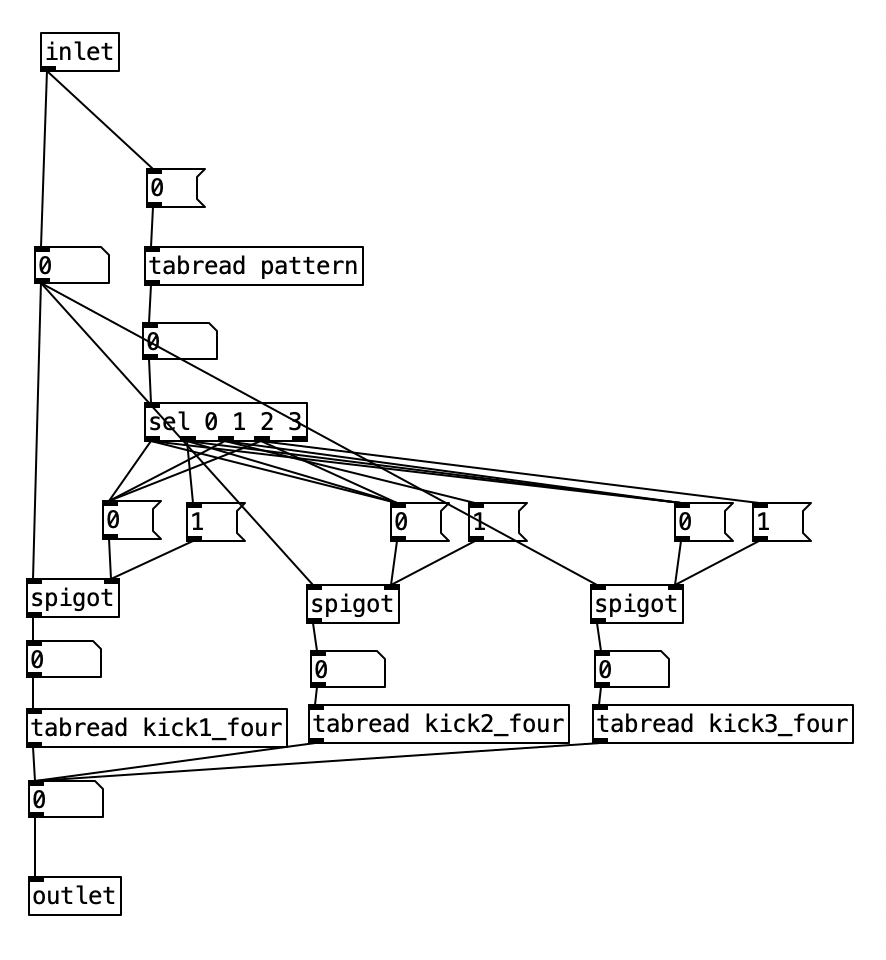

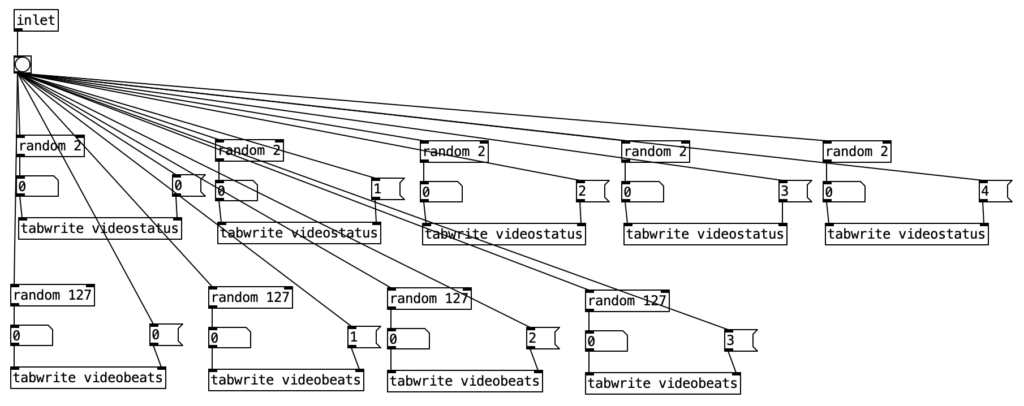

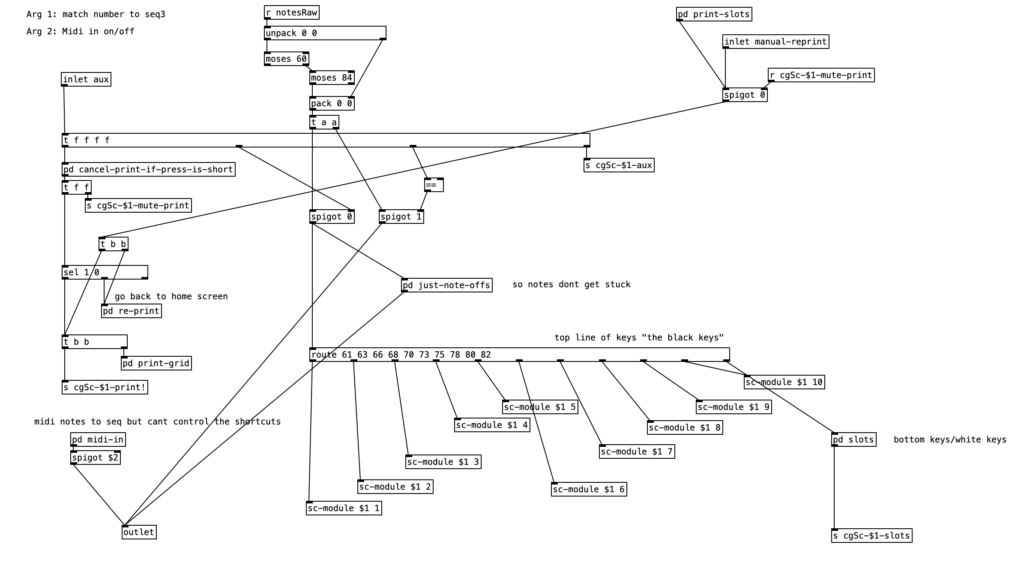

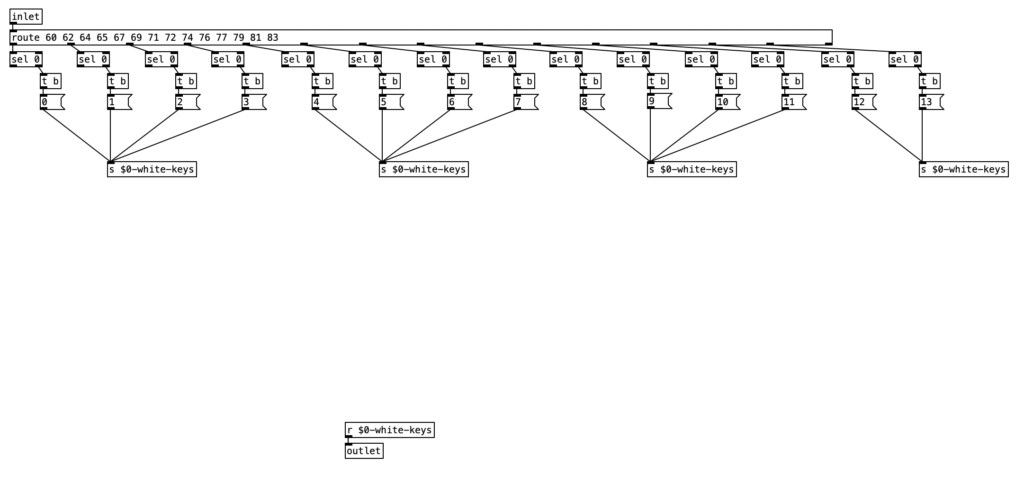

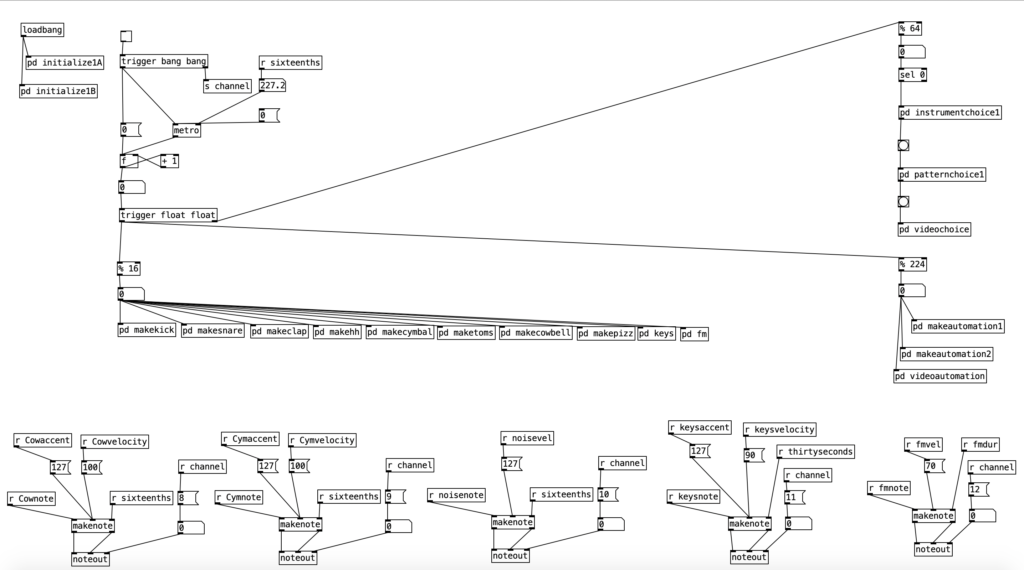

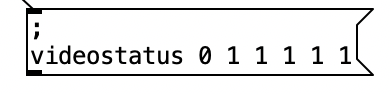

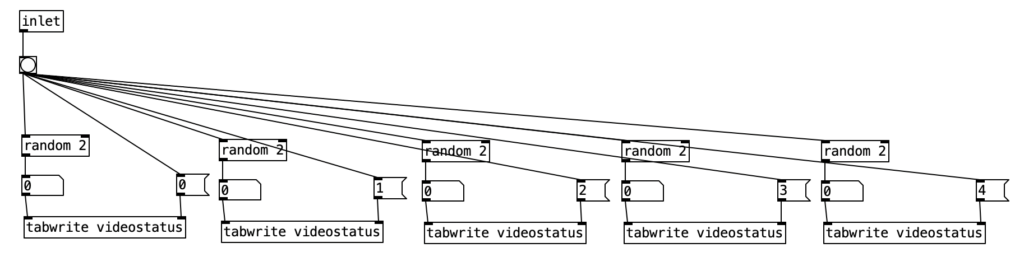

The PureData patch that was used to generate the accompaniment for this experiment was based upon the patch created for last month’s experiment. As a reminder, that algorithm randomly chooses one of four musical meters, 4/4, 3/4, 7/8, or 5/8, at every new phrase. I altered this algorithm to fit a plan I have for six of the tracks on my next studio album, which will likely take three or four years to complete. Rather than selecting the meters randomly, I define an array of numbers that represent the meters that should be used, in the order that they appear. At every phrase change I then move to the next value in the array, allowing the meters to change in a predetermined fashion.

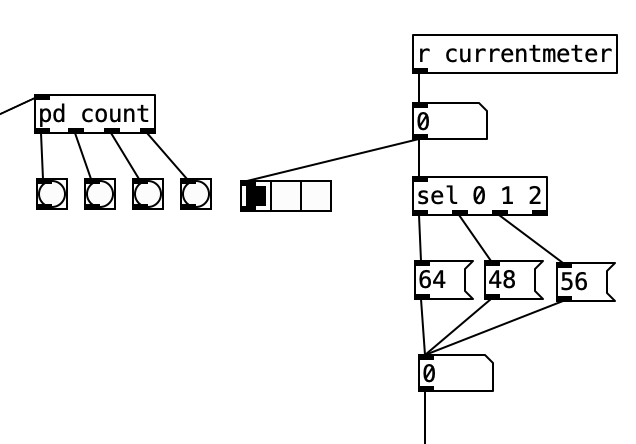

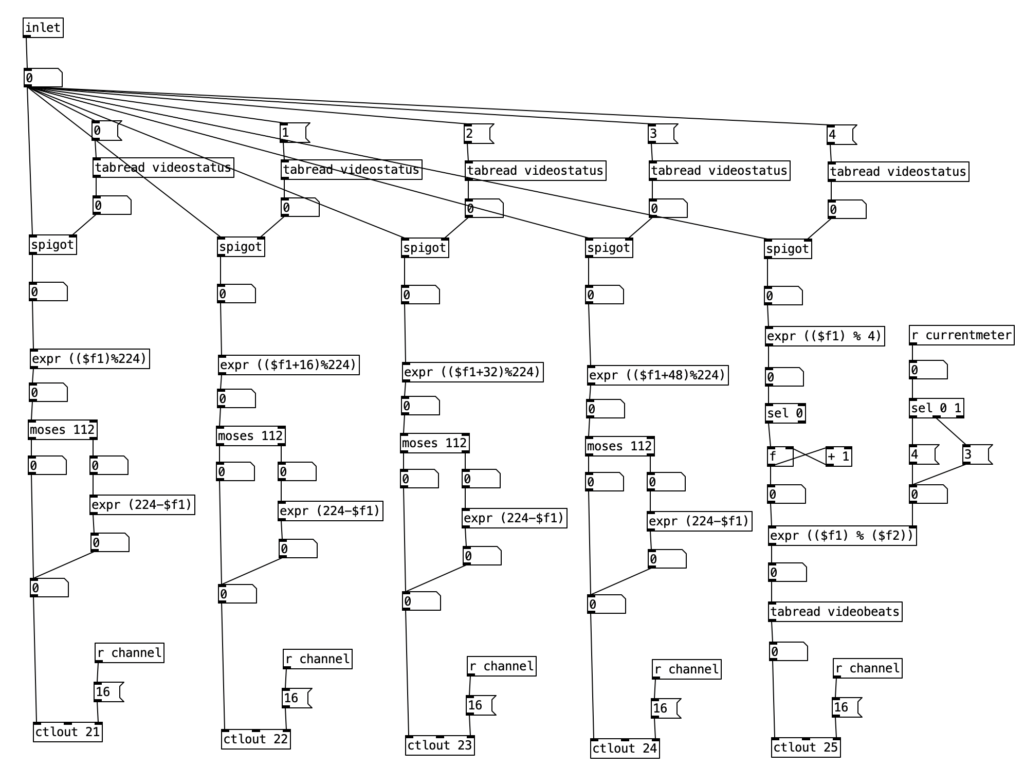

I put the piece of magic that allows this to happen in pd phrasechange. The inlet to this subroutine goes to a sel statement that contains the positions, expressed in sixteenth notes, at which new phrases begin. When one of those values is reached, a counter is incremented, a value is read from the table meter and sent to the variable currentmeter, and the phrase is reset. This subroutine has four outlets. The first starts a blinking light that indicates that the piece is 1/3 finished, and the second starts a blinking light that indicates that the piece is 2/3 finished. The third outlet starts a blinking light that indicates the piece is on its final phrase. The fourth outlet stops the algorithm, bringing the piece to a close. Those blinking lights are on the right hand side of the screen, located near the buttons that indicate the current meter and the current beat. A performer can then, with some practice, watch the computer screen to see what the current meter is, what the current beat is, and roughly where they are in the form of the piece.
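The core of that logic can be sketched in Python. This is a loose analogy to the PureData subpatch rather than a translation. The class name, the meter labels, and the phrase boundary values are all invented for illustration, and the blinking-light outlets are left out.

```python
class PhraseChanger:
    """Step through a fixed list of meters at predefined phrase boundaries."""

    def __init__(self, meters, phrase_starts):
        self.meters = meters                     # stands in for the table "meter"
        self.phrase_starts = set(phrase_starts)  # boundaries in sixteenth notes
        self.index = 0                           # the counter
        self.current_meter = meters[0]           # stands in for "currentmeter"

    def tick(self, sixteenth):
        """Call once per sixteenth note; returns True when a new phrase starts."""
        if sixteenth in self.phrase_starts and self.index + 1 < len(self.meters):
            self.index += 1
            self.current_meter = self.meters[self.index]
            return True
        return False


# Hypothetical plan: three phrases, changing at sixteenth notes 64 and 120.
changer = PhraseChanger(["4/4", "7/8", "3/4"], [64, 120])
for sixteenth in range(1, 130):
    if changer.tick(sixteenth):
        print(sixteenth, changer.current_meter)
```

Because the meters come from a list rather than a random choice, every run of the piece follows the same form.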

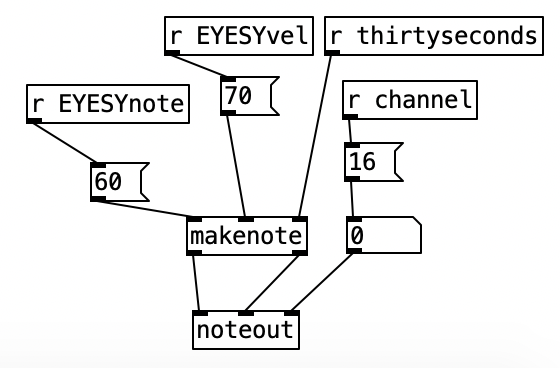

This month I created my first program for the EYESY, Basic Circles. The EYESY manual includes a very simple example of a program. However, it is too simple: it just displays a circle. The circle doesn’t move, none of the knobs change anything, and the circle isn’t even centered. With very little work I was able to center the circle, and change it so that the size of the circle was controlled by the volume of the audio. Likewise, I was able to get knob four to control the color of the circle, and the fifth knob to control the background color.

However, I wanted to create a program that used all five knobs on the EYESY. I quickly came up with the idea of using knob two to control the horizontal positioning, and the third knob to control the vertical positioning. I still had one knob left, and only a simple circle in the middle of the screen to show for it. I decided to add a second circle that was half the size of the first one. I used knob five to set the color for this second circle, although oddly it does not result in the same color as the background. Yet this still was not quite visually satisfying, so I used knob one to set an offset from the larger circle. Accordingly, when knob one is in the center, the small circle is centered within the larger one. As you turn the knob to the left the small circle moves to the upper left quadrant of the screen. As you turn the knob to the right the smaller circle moves towards the lower right quadrant. This is simple, but offers just enough visual interest to be tolerable.

```python
import os
import pygame
import time
import random
import math


def setup(screen, etc):
    # Nothing to initialize for this mode.
    pass


def draw(screen, etc):
    # Circle radius follows the first audio sample; the second circle is half that.
    size = int(abs(etc.audio_in[0]) / 100)
    size2 = int(size / 2)
    position = (640, 360)  # not used below

    # Knob 4 picks the large circle's color, knob 5 the small circle's color.
    color = etc.color_picker(etc.knob4)
    color2 = etc.color_picker(etc.knob5)

    # Knobs 2 and 3 place the large circle horizontally and vertically.
    X = int(320 + (640 * etc.knob2))
    Y = int(180 + (360 * etc.knob3))

    # Knob 1 offsets the small circle from the large one.
    # Note: with 310 the horizontal offset at knob1 = 0.5 is 5 pixels; 320 would give 0.
    X2 = int(X + 160 - (310 * etc.knob1))
    Y2 = int(Y + 90 - (180 * etc.knob1))

    # Knob 5 also sets the background color.
    etc.color_picker_bg(etc.knob5)

    pygame.draw.circle(screen, color, (X, Y), size, 0)
    pygame.draw.circle(screen, color2, (X2, Y2), size2, 0)
```

While the program, listed above, is very simple, it was my first time programming in Python. Furthermore, targeting the EYESY is not the simplest thing to do. You have to plug a wireless USB adapter into the EYESY, connect to the EYESY via a web browser, upload your program as a zip file, unzip the file, and then delete the zip file. You then have to restart the video on the EYESY to see if the patch works. If there is an error in your code, the program won’t load, which means you cannot troubleshoot it on the device; you just have to look through your code line by line and figure it out. I did learn, however, to use an online Python compiler to check for basic errors. If you have a minor error in your code, the EYESY will sometimes load the program and display a simple error message onscreen, which will allow you to at least figure out where the error is.

I’m very pleased with the backing track, and given that it is my first program for the EYESY, with the visuals. I’m not super pleased with the audio from the Organelle. Some of this is due to my playing. For this experiment I used a very limited set of pitches in my improvisation, which made the fingering easier than it has been in other experiments. Also, I printed out a fingering chart and kept it in view as I played. Part of it is due to my lack of rhythmic accuracy. I am still getting used to watching the screen in PureData to see what meter I am in and what the current beat is. I’m sure I’ll get the hang of it with some practice.

One fair question to ask is why I continue to use the WARBL to control the Organelle if I consistently find it so challenging. The simple answer is that I consider a wind controller to be the true test of the expressiveness of a digital musical instrument. I should be able to make minute changes to the sound by slight changes in breath pressure. After working with the Organelle for nine months, I can say that it fails this test. The knobs on the Organelle seem to quantize at a low resolution. As you turn a knob you are changing the resistance in a circuit. The resulting analog signal is then quantized, that is, its value is rounded to the nearest digital value. I have a feeling that the knobs on the Organelle quantize to seven bits in order to directly correspond to MIDI, which is largely a seven bit protocol. Seven bits of data only allow for 128 possible values. Thus, we hear discrete changes rather than continual ones as we rotate a knob. For some reason I find this shortcoming is amplified rather than softened when you control the Organelle with a wind controller. At some point I should do a comparison where I control a PureData patch on my laptop using the WARBL without using the Organelle.
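To make the 128-value limit concrete, here is a small Python sketch of quantizing a control value to a given bit depth. It illustrates the arithmetic only. Whether the Organelle's knobs really resolve to seven bits is a suspicion stated above, not something this code confirms, and the `quantize` function is made up for the example.

```python
def quantize(value, bits=7):
    """Round a 0..1 control value to the nearest of 2**bits evenly spaced levels."""
    steps = 2 ** bits - 1  # 127 steps separate 128 values
    return round(value * steps) / steps


# Sweep a "knob" smoothly through 10,001 positions and count distinct outputs.
sweep = [i / 10000 for i in range(10001)]
print(len({quantize(v) for v in sweep}))           # 128
print(len({quantize(v, bits=10) for v in sweep}))  # 1024
```

A smooth sweep of breath pressure collapses onto 128 stair steps at seven bits, each about 0.8% of the full range, which is coarse enough to hear on a sensitive parameter.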

I recorded this experiment using multichannel recording, and I discovered something else that is disappointing about the Organelle. I found that there was enough background noise coming from the Organelle that I had to use a noise gate to clean up the audio a bit. In fact, I had to set the threshold at around -35 dB to get rid of the noise. This is actually pretty loud. The Volca Keys also requires a noise gate, but a lower threshold of -50 or -45 dB usually does the trick with it.
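For a sense of scale, decibel thresholds can be converted to linear amplitude with the standard formula 10^(dB/20). The Python sketch below does that and applies a deliberately naive per-sample gate. The `gate` function is a hypothetical illustration only, since real noise gates track a smoothed envelope with attack and release times.

```python
def db_to_amplitude(db):
    """Convert a level in dB (relative to full scale = 1.0) to linear amplitude."""
    return 10 ** (db / 20)


def gate(samples, threshold_db):
    """Naive gate: silence any sample whose magnitude is below the threshold."""
    threshold = db_to_amplitude(threshold_db)
    return [s if abs(s) >= threshold else 0.0 for s in samples]


print(db_to_amplitude(-35))  # roughly 0.018
print(db_to_amplitude(-50))  # roughly 0.003
print(gate([0.5, 0.01, -0.02], -35))
```

A -35 dB threshold sits at roughly 1.8% of full scale, against roughly 0.3% for -50 dB, so the noise floor being gated here is several times higher in amplitude.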

Perhaps this noise is due to a slight ground loop, a small short in the cable, RF interference, or some other problem that does not originate in the Organelle, but it doesn’t bode well. Next month I may try another FM or additive instrument. I do certainly have a good head start on the EYESY patch for next month.