For this month’s experiment I created a sample player that triggers bass harmonica samples. I based the patch on Lo-Fi Sitar by a user called woiperdinger. That patch was, in turn, based on Lo-Fi Piano by Henr!k, who says it was based on a patch called Piano48 by Critter & Guitari.

The number 48 in the title refers to the number of keys / samples in the patch. Accordingly, my patch has the potential for 48 different notes, although only 24 notes are actually implemented, because the bass harmonica I used to create the sample set only features 24 pitches. I recorded the samples using a pencil condenser microphone. I pitch-corrected each note (my bass harmonica is fairly out of tune at this point), and I EQed each note to balance the volumes a bit. Initially I had problems, as I had recorded the samples at a higher sample rate (48 kHz) than the Organelle operates at (44.1 kHz). This resulted in the pitch being higher than planned, but it was easily fixed by adjusting the sample rate on each sample. I had planned on recording the remaining notes using my Hohner Chromonica 64, but I ran out of steam. Perhaps I will expand the sample set in a future release.
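To put a number on the sample rate mismatch described above, here is a small Python sketch (not part of the patch) that works out how large the pitch error is when 48 kHz and 44.1 kHz get confused. It only computes the size of the shift; the function name is my own.

```python
import math

RECORDED_RATE_HZ = 48_000   # rate the samples were recorded at
ORGANELLE_RATE_HZ = 44_100  # rate the Organelle operates at


def mismatch_in_semitones(rate_a: float, rate_b: float) -> float:
    """Size of the pitch shift, in equal-tempered semitones, between two sample rates."""
    return abs(12 * math.log2(rate_a / rate_b))


shift = mismatch_in_semitones(RECORDED_RATE_HZ, ORGANELLE_RATE_HZ)
print(f"{shift:.2f} semitones")  # prints "1.47 semitones"
```

In other words, the mismatch is worth roughly a semitone and a half, which is more than enough to hear.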

The way this patch works is that every single note has its own sample. Each note is also treated as its own voice, so my 24-note bass harmonica patch allows all 24 notes to be played simultaneously. The advantage of giving each note its own sample is that each note will sound different, which makes the instrument sound more naturalistic. For instance, the low D# in my sample set is really buzzy sounding, because that note buzzes on my instrument. Occasionally hitting a note with a different tone color makes it sound more realistic. In addition, none of the samples have to be rescaled to create other pitches. Rescaling a sample either stretches the time out (for a lower pitch) or squashes the time (for a higher pitch), which again reduces realism.
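The stretching and squashing can be shown with a little arithmetic. This is a minimal Python sketch, assuming simple resampling in equal temperament, where each semitone changes playback speed by a factor of 2^(1/12). The function names and the 2000 ms sample length are made up for illustration.

```python
def playback_ratio(semitones: float) -> float:
    """Speed factor needed to shift a sample by the given number of semitones."""
    return 2 ** (semitones / 12)


def resampled_duration_ms(original_ms: float, semitones: float) -> float:
    """Duration of a sample after it is rescaled to a new pitch."""
    return original_ms / playback_ratio(semitones)


# A hypothetical 2000 ms sample, moved up and down an octave.
print(resampled_duration_ms(2000, 12))   # 1000.0 (higher pitch, squashed)
print(resampled_duration_ms(2000, -12))  # 4000.0 (lower pitch, stretched)
```

With one sample per note, the ratio is always 1 unless you deliberately transpose, so every note keeps its recorded length.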

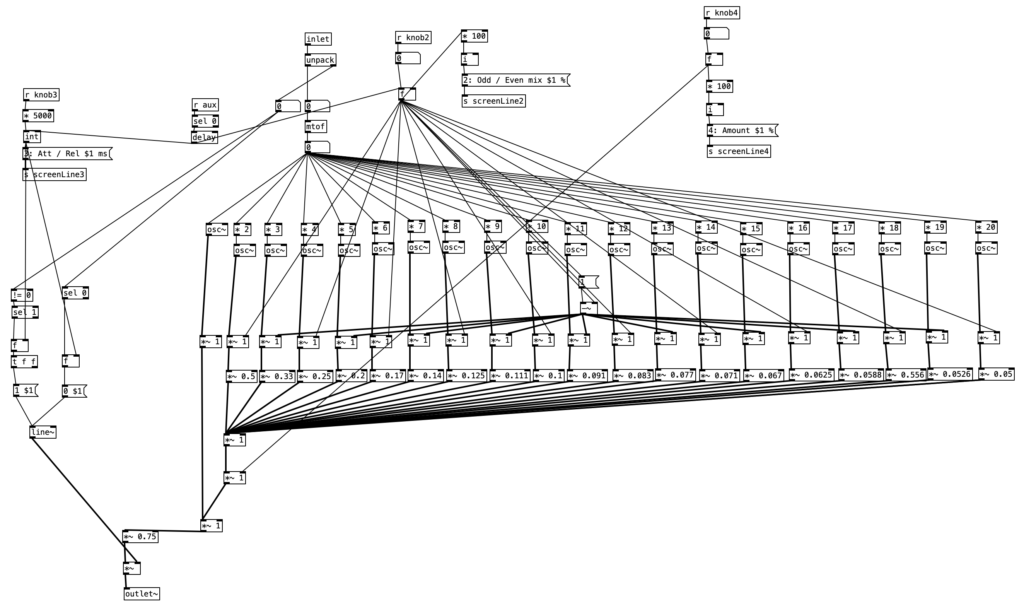

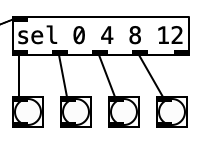

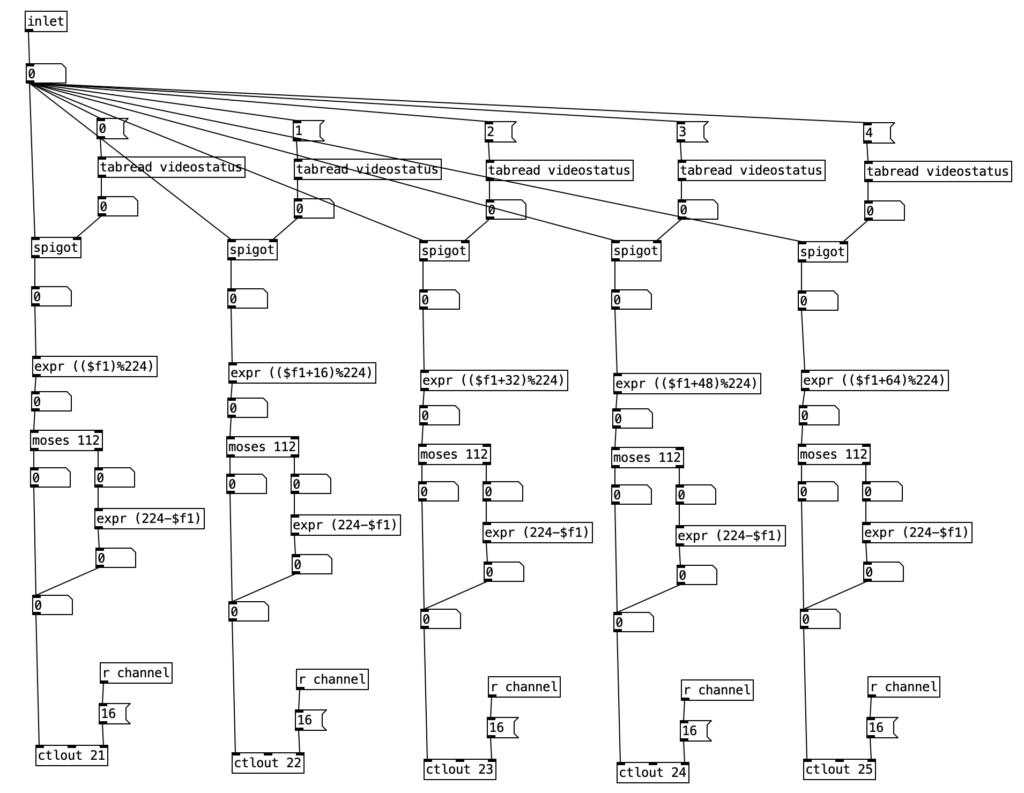

The image above looks like 48 individual subroutines, but it is actually 48 instantiations of a single abstraction. The abstraction is called sampler-voice, and each instance of this abstraction is passed the file name of the sample along with the key number that triggers the sample. The key numbers are MIDI key numbers, where 60 is middle C, and each number up or down is one chromatic note away (so 61 would be C#).

Inside sampler-voice we see basically two algorithms, one that executes when the abstraction loads (using loadbang), and one that runs while the patch is running. If we look at the loadbang portion of the abstraction, we see that it uses the object soundfiler to load the given sample into an array. This sample is then displayed in the canvas to the left. Soundfiler sends the number of samples it read out of its left outlet. This number is then divided by 44.1 (the number of samples per millisecond at 44.1 kHz) to yield the length of the sample in milliseconds.
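The division by 44.1 is easy to check outside of Pure Data. Here is the same calculation as a short Python sketch; the function name and the sample count are my own examples, not values from the patch.

```python
SAMPLES_PER_MS = 44.1  # 44,100 samples per second / 1000 ms per second


def sample_length_ms(num_samples: int) -> float:
    """Convert a sample count (as reported by soundfiler) to milliseconds."""
    return num_samples / SAMPLES_PER_MS


# A hypothetical file of 88,200 samples lasts about two seconds.
print(f"{sample_length_ms(88_200):.1f} ms")  # prints "2000.0 ms"
```

Note that this only gives the right answer if the file really is at 44.1 kHz, which is why the 48 kHz recordings caused trouble.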

As previously stated, the balance of the algorithm operates while the program is running. The top part of the algorithm, r notes, r notes_seq, r zero_notes, listens for notes. The object stripnote is used to isolate the MIDI note number of the given event. This is then passed through a mod (%) 48 object, as the instrument itself only has 48 notes. I could have changed this value to 24 if I wanted every sample to recur once every two octaves. The object sel $2 is then used to filter out all notes except the one that should trigger the given sample ($2 means the second value passed to the abstraction). The portion of the algorithm that reads the sample from the array is tabread4~ $1-array.
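The stripnote, % 48, sel $2 chain can be modeled in a few lines of Python. This is only a sketch of the logic, not the patch itself: the function names are mine, and the voice key of 12 is a hypothetical value standing in for $2.

```python
NUM_KEYS = 48  # the modulus used in the patch


def wrapped_key(midi_note: int) -> int:
    """Fold any MIDI note number into the 0-47 range, like the % 48 object."""
    return midi_note % NUM_KEYS


def voice_fires(midi_note: int, velocity: int, voice_key: int) -> bool:
    """True if this voice should play: note-offs are dropped (stripnote),
    then the wrapped note must match the voice's key (sel $2)."""
    if velocity == 0:
        return False
    return wrapped_key(midi_note) == voice_key


HYPOTHETICAL_VOICE_KEY = 12  # stands in for the $2 argument of one voice
for note in (12, 60, 61, 108):
    print(note, voice_fires(note, 100, HYPOTHETICAL_VOICE_KEY))
# prints: 12 True, 60 True, 61 False, 108 True (one pair per line)
```

Because of the modulus, the same voice responds to notes 48 apart, so a sample recurs every four octaves. Changing NUM_KEYS to 24 would make it recur every two.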

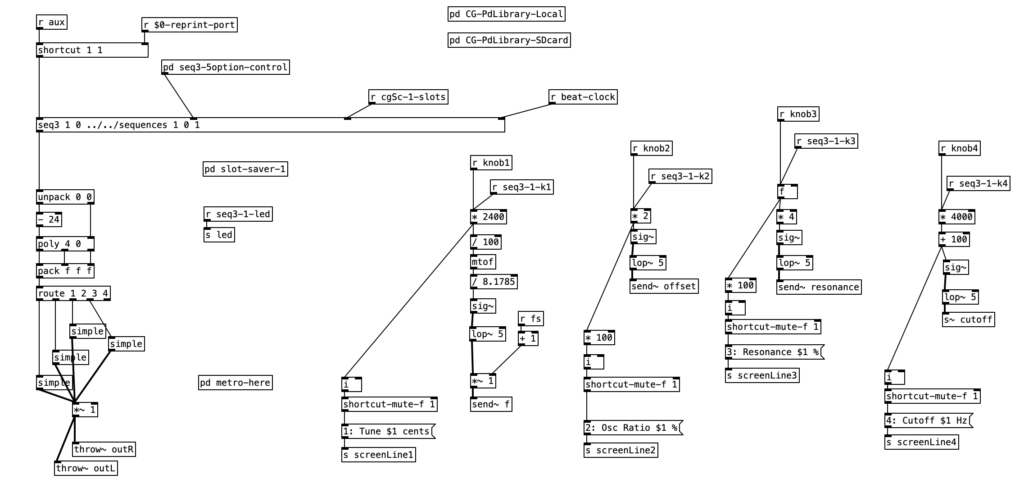

Knob 1 of the Organelle is used to control the pitch of the instrument (in case you want to transpose the sample). The second knob is used to control both the output level of the reverb as well as the mix between the dry (unprocessed) sound and the wet (reverberated) sound. In Lo-Fi Sitar, these two parameters each had their own knob. I combined them to free up one additional control. Knob 3 is a decay control that can be used if you don’t want the whole sample to play. The final knob controls volume, which is useful with a wind controller such as the Warbl, since it lets breath control drive the volume of the sample.

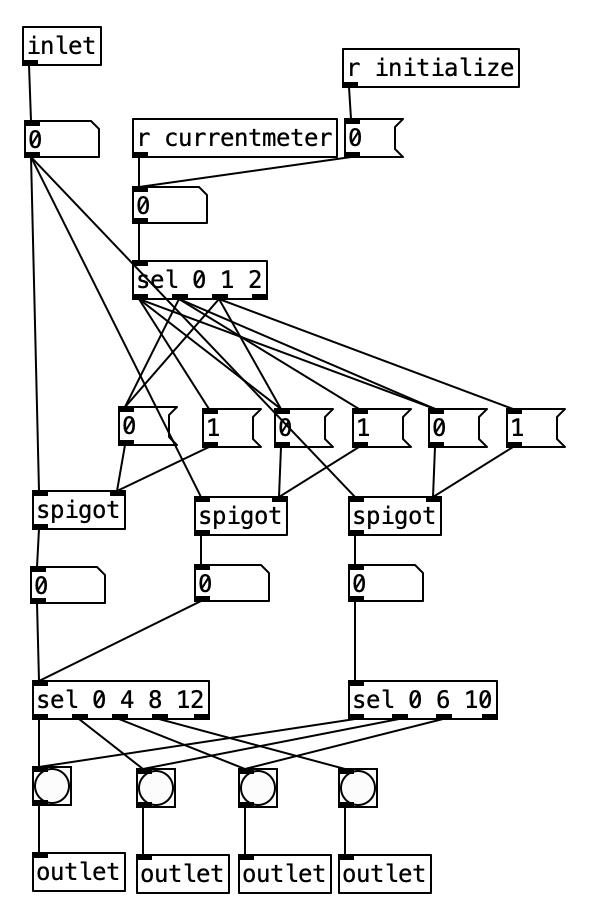

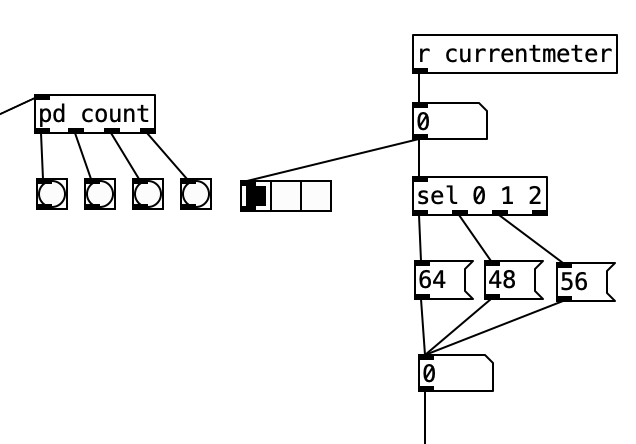

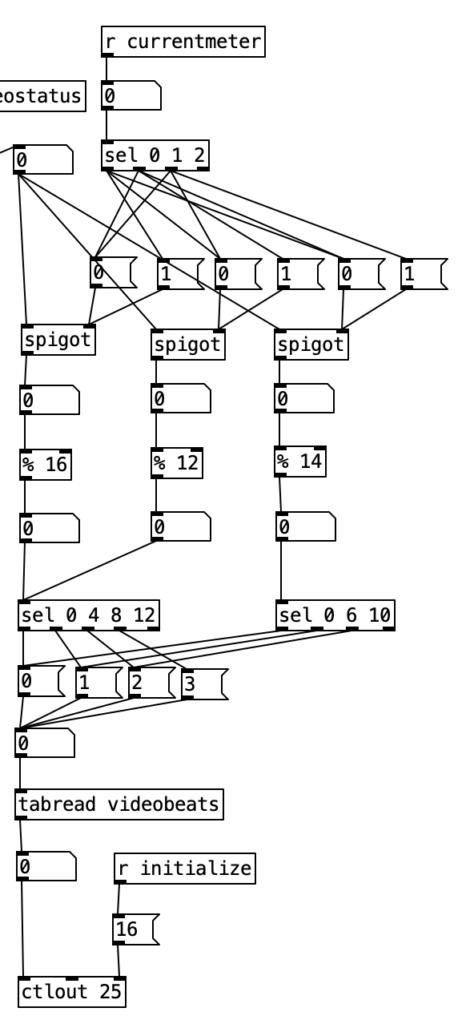

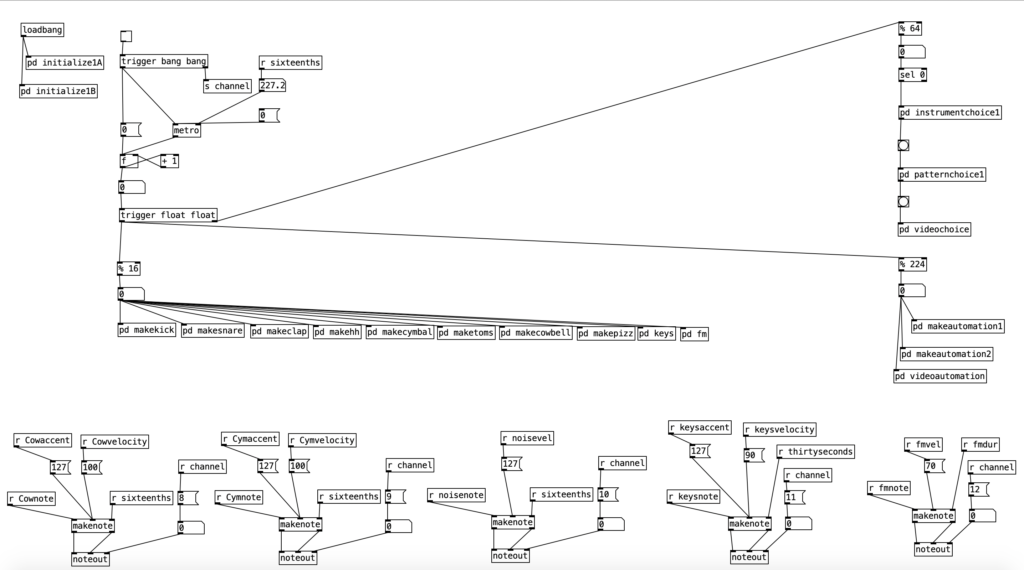

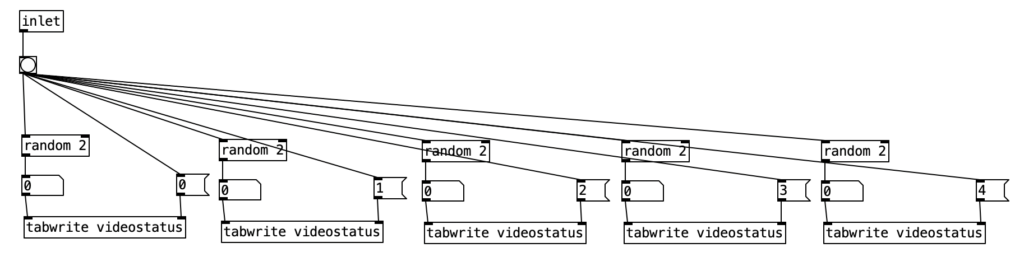

The PureData patch that controls the accompaniment is basically the finished version of the patch I’ve been expanding through this grant project. In addition to the previously used meters 4/4, 3/4, and 7/8, I’ve added 5/8. I’d share information about the algorithm itself, but it is just more of the same. Likewise, I haven’t done anything new with the EYESY. I’m hoping next month I’ll have the time to tweak an existing program for EYESY, but I just didn’t have the time to do that this month.

I probably should have kept the algorithm at a slower tempo, as I think the music works better that way. The bass harmonica samples sound fairly natural, except for when the Organelle seems to accidentally trigger multiple samples at once. I have a theory that the Warbl uses a MIDI Note On message with a velocity of 0 to stop notes, which is an acceptable use of a Note On message, but that PureData uses it to trigger a note. If this is the case, I should be able to fix it in the next release of the patch.
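If the theory is right, the fix amounts to treating a Note On with velocity 0 the same as a Note Off, which is how the MIDI specification says receivers should behave. Here is a minimal Python sketch of that check on raw MIDI bytes. The function name is my own, and whether the Warbl actually sends these messages is still just my guess.

```python
NOTE_OFF = 0x80  # status byte for Note Off (channel is in the low 4 bits)
NOTE_ON = 0x90   # status byte for Note On


def is_note_off(status: int, velocity: int) -> bool:
    """True for a real Note Off, or for a Note On with velocity 0."""
    kind = status & 0xF0  # mask off the channel number
    return kind == NOTE_OFF or (kind == NOTE_ON and velocity == 0)


print(is_note_off(0x90, 0))   # True: Note On, velocity 0, should stop the note
print(is_note_off(0x90, 64))  # False: a normal Note On
print(is_note_off(0x80, 64))  # True: an ordinary Note Off
```

In the patch, the equivalent would be making sure every path that can trigger a sample drops velocity 0 events before they reach the voices.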

It certainly sounds like I need to practice my EVI fingering more, but I found the limited pitch range (two octaves) of the sample player made the fingering easier to keep track of. Since you cannot use your embouchure with an EVI, you use range fingerings to change range. With the Warbl, your right hand (lower hand) is doing traditional valve fingerings, while your left hand (upper hand) is doing fingerings based upon traditional valve fingerings to control what range you are playing in. Keeping track of how the left hand affects the notes being triggered by the right hand has been my stumbling block in terms of learning EVI fingering. However, a two-octave range means you really only need to keep track of four ranges. I found the breath control of the bass harmonica samples to be adequate. I think I’d really have to spend some time calibrating the Warbl and the Organelle to come up with settings that will optimize the use of breath control. Next month I hope to create a more fanciful sample-based instrument, and maybe move forward on programming for the EYESY.